Posts tagged "haskell":

Validation of data in a servant server

I've been playing around with adding more validation of data received by an HTTP endpoint in a servant server. Defining a type with a FromJSON instance is very easy: just derive a Generic instance and it just works. Here's a simple example:

{-# LANGUAGE DeriveGeneric, DerivingVia #-}

import Data.Aeson (FromJSON, ToJSON)
import Data.Text (Text)
import GHC.Generics (Generic, Generically (..))

data Person = Person
  { name :: Text
  , age :: Int
  , occupation :: Occupation
  }
  deriving (Generic, Show)
  deriving (FromJSON, ToJSON) via (Generically Person)

data Occupation = UnderAge | Student | Unemployed | SelfEmployed | Retired | Occupation Text
  deriving (Eq, Generic, Ord, Show)
  deriving (FromJSON, ToJSON) via (Generically Occupation)

However, the validation is rather limited: basically it just checks that each field is present and of the correct type. For the type above I'd like to enforce some constraints on the combination of age and occupation.

The steps I thought of are:

- Hide the default constructor and define a smart one. (This is the standard suggestion for placing extra constraints on values.)

- Manually define the FromJSON instance, using the Generic instance (to limit the amount of code) and the smart constructor.

The smart constructor

I give the constructor the result type Either String Person to make sure it's usable both in regular code and when defining parseJSON.

import Control.Monad (when)

mkPerson :: Text -> Int -> Occupation -> Either String Person
mkPerson name age occupation = do
  guardE mustBeUnderAge
  guardE notUnderAge
  guardE tooOldToBeStudent
  guardE mustBeRetired
  pure $ Person name age occupation
  where
    -- fail with the given message when the condition holds
    guardE (cond, err) = when cond $ Left err
    mustBeUnderAge = (age < 8 && occupation > UnderAge, "too young for occupation")
    notUnderAge = (age > 15 && occupation == UnderAge, "too old to be under age")
    tooOldToBeStudent = (age > 45 && occupation == Student, "too old to be a student")
    mustBeRetired = (age > 65 && occupation /= Retired, "too old to not be retired")

Here I make use of Either e being a Monad, and use when to apply the constraints and make sure the reason for a failure is passed on to the caller.
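To see the guards in action without pulling in aeson or text, here's a base-only copy of the smart constructor. The only changes, which are mine, are that String stands in for Text and the JSON deriving is dropped. Because Either short-circuits, the guards run in the order they're listed and the first one that fires decides the error.

```haskell
import Control.Monad (when)

-- Base-only copies of the types above: String stands in for Text.
data Occupation = UnderAge | Student | Unemployed | SelfEmployed | Retired | Occupation String
  deriving (Eq, Ord, Show)

data Person = Person {name :: String, age :: Int, occupation :: Occupation}
  deriving (Eq, Show)

-- Same guards as in the post; Either stops at the first one that fires.
mkPerson :: String -> Int -> Occupation -> Either String Person
mkPerson name age occupation = do
  guardE mustBeUnderAge
  guardE notUnderAge
  guardE tooOldToBeStudent
  guardE mustBeRetired
  pure $ Person name age occupation
  where
    guardE (cond, err) = when cond $ Left err
    mustBeUnderAge = (age < 8 && occupation > UnderAge, "too young for occupation")
    notUnderAge = (age > 15 && occupation == UnderAge, "too old to be under age")
    tooOldToBeStudent = (age > 45 && occupation == Student, "too old to be a student")
    mustBeRetired = (age > 65 && occupation /= Retired, "too old to not be retired")
```

For instance, mkPerson "Ed" 70 Student returns Left "too old to be a student", not "too old to not be retired", because tooOldToBeStudent comes first. By contrast, mkPerson "Di" 70 Retired passes every guard and returns a Right.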

The FromJSON instance

When defining the instance I take advantage of the Generic instance to keep the implementation short and simple:

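-- This replaces the FromJSON instance derived via Generically above; that
-- deriving clause should then only list ToJSON. The Person{..} pattern with
-- named fields needs NamedFieldPuns.
instance FromJSON Person where
  parseJSON v = do
    Person{name, age, occupation} <- genericParseJSON defaultOptions v
    either fail pure $ mkPerson name age occupation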

If there are many more fields in the type I'd consider using RecordWildCards.
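The combination of RecordWildCards and either fail pure can be tried without aeson. In this hypothetical sketch, a two-field Person and a simple smart constructor stand in for the ones above. revalidate plays the role of the instance body: it takes a value built without the smart constructor (in the post, by genericParseJSON), unpacks all fields with Person{..}, and runs them back through mkPerson. Since it only needs MonadFail, it works in aeson's Parser as well as in Maybe or lists.

```haskell
{-# LANGUAGE RecordWildCards #-}

-- Hypothetical record and smart constructor standing in for Person and mkPerson.
data Person = Person {name :: String, age :: Int}
  deriving (Eq, Show)

mkPerson :: String -> Int -> Either String Person
mkPerson name age
  | age < 0 = Left "age must not be negative"
  | otherwise = Right (Person name age)

-- | Re-check a value that was built without the smart constructor.
-- Person{..} brings every field into scope, and 'either fail pure' turns a
-- Left into a failure in whatever MonadFail the caller uses.
revalidate :: MonadFail m => Person -> m Person
revalidate Person {..} = either fail pure $ mkPerson name age
```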

Conclusion

No, it's nothing ground-breaking but I think it's a fairly nice example of how things can fit together in Haskell.

Reading Redis responses

When I began experimenting with writing a new Redis client package I decided to use lazy bytestrings, because:

- aeson seems to prefer it – the main encoding and decoding functions use lazy bytestrings, though there are strict variants too.

- the Builder type in bytestring produces lazy bytestrings.

At the time I was happy to see that attoparsec seemed to support strict and lazy bytestrings equally well.

To get on with things I also wrote the simplest function I could come up with for sending and receiving data over the network – I used send and recv from Network.Socket.ByteString.Lazy in network. The function was really simple:

import Network.Socket.ByteString.Lazy qualified as SB

sendCmd :: Conn -> Command r -> IO (Result r)
sendCmd (Conn p) (Command k cmd) = withResource p $ \sock -> do
  _ <- SB.send sock $ toWireCmd cmd
  resp <- SB.recv sock 4096
  case decode resp of
    Left err -> pure $ Left $ RespError "decode" (TL.pack err)
    Right r -> pure $ k <$> fromWireResp cmd r

with decode defined like this:

decode :: ByteString -> Either String Resp
decode = parseOnly resp

I knew I'd have to revisit this function; it was naïve to believe that a call to recv would always result in a single complete response. It was, however, good enough to get going. When I got to improving sendCmd I was a little surprised to find that I'd also have to switch to using strict bytestrings in the parser.

Interlude on the Redis serialisation protocol (RESP3)

The Redis protocol has some defining attributes:

- It's somewhat of a binary protocol. If you stick to keys and values that fall within the set of ASCII strings, then the protocol is human-readable and you can rather easily use netcat or telnet as a client. However, you aren't limited to storing only readable strings.

- It's somewhat of a request-response protocol. A notable exception is the publish-subscribe subset, but it's rather small and I reckon most Redis users don't use it.

- It's somewhat of a type-length-value style protocol. Some of the data types include their length in bytes, e.g. bulk strings and verbatim strings. Other types include the number of elements, e.g. arrays and maps. A large number of them have no length at all, e.g. simple strings, integers, and doubles.

I suspect there are good reasons for this design; I gather a lot of it has to do with speed. It does, however, cause one issue when writing a client: it's not possible to read a whole response without parsing it.
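To make that concrete, here's a small base-only sketch, my own and not code from the client, that finds where the first response in a buffer ends. It handles only a handful of RESP3 types. It also uses String instead of ByteString and assumes ASCII, so that a bulk string's byte length equals its length in Chars. Even so, finding the end of an array means walking every element inside it.

```haskell
import Control.Monad (foldM)
import Text.Read (readMaybe)

-- | Read one CRLF-terminated line, returning it (without the CRLF) and the rest.
line :: String -> Maybe (String, String)
line = go ""
  where
    go acc ('\r' : '\n' : rest) = Just (reverse acc, rest)
    go acc (c : rest) = go (c : acc) rest
    go _ [] = Nothing

-- | Skip exactly one complete response and return the input after it.
-- Nothing means the response is incomplete (or, in this sketch, malformed).
skipResponse :: String -> Maybe String
skipResponse s = do
  (header, rest) <- line s
  case header of
    '+' : _ -> Just rest -- simple string: no length, ends at CRLF
    '-' : _ -> Just rest -- simple error
    ':' : _ -> Just rest -- integer
    '_' : _ -> Just rest -- null
    '$' : n -> do
      -- bulk string: length first, then the payload and a CRLF
      len <- readMaybe n
      case splitAt len rest of
        (payload, '\r' : '\n' : rest')
          | length payload == len -> Just rest'
        _ -> Nothing
    '*' : n -> do
      -- array: element count first, then that many complete responses
      count <- readMaybe n
      foldM (\r _ -> skipResponse r) rest [1 .. count :: Int]
    _ -> Nothing

-- | Split the input into the first complete response and whatever follows.
splitResponse :: String -> Maybe (String, String)
splitResponse s = do
  rest <- skipResponse s
  pure (take (length s - length rest) s, rest)
```

For example, splitResponse "+OK\r\n:1\r\n" gives Just ("+OK\r\n", ":1\r\n"), while splitResponse "$5\r\nhel" gives Nothing because the bulk string isn't complete yet.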

Rewriting sendCmd

With that extra information about the RESP3 protocol, the naïve implementation above falls short in a few ways:

- The read buffer may contain more than one full message, and given the definition of decode above, any remaining bytes are simply dropped.[1]

- The read buffer may contain less than one full message, and then decode will return an error.[2]

Surely this must be solvable, because in my mind running the parser results in one of three things:

- Parsing is done and the result is returned, together with any input that wasn't consumed.

- The parsing is not done due to lack of input, this is typically encoded as a continuation.

- The parsing failed so the error is returned, together with input that wasn't consumed.
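Those three outcomes map directly onto a result type. The following hypothetical, base-only sketch models them. It includes a hand-written incremental parser for a single RESP3 simple string (such as +OK\r\n) and a driver that feeds it chunks, roughly in the spirit of attoparsec's parseWith. An empty chunk means end of input.

```haskell
-- | Outcome of running an incremental parser.
data Result a
  = Done String a -- finished; carries the unconsumed input
  | Partial (String -> Result a) -- needs more input; "" means end of input
  | Fail String String -- failed; carries unconsumed input and a message

-- | Incrementally parse one RESP3 simple string, e.g. "+OK\r\n".
simpleString :: String -> Result String
simpleString input = case input of
  [] -> Partial (\more -> if null more then Fail "" "unexpected end of input" else simpleString more)
  '+' : rest -> line "" rest
  _ -> Fail input "expected '+'"
  where
    -- accumulate characters (reversed) until CRLF, asking for more if needed
    line acc s = case s of
      '\r' : '\n' : rest -> Done rest (reverse acc)
      "\r" -> Partial (\more -> if null more then Fail s "unexpected end of input" else line acc ('\r' : more))
      [] -> Partial (\more -> if null more then Fail "" "unexpected end of input" else line acc more)
      c : rest -> line (c : acc) rest

-- | Feed a list of chunks to a parser, the way recv might deliver them.
-- Chunks left over when the parser finishes are kept as unconsumed input.
feedChunks :: (String -> Result a) -> [String] -> Result a
feedChunks parser chunks = case chunks of
  [] -> step (parser "") []
  c : cs -> step (parser c) cs
  where
    step (Partial k) (c : cs) = step (k c) cs
    step (Partial k) [] = k ""
    step (Done rest a) cs = Done (rest ++ concat cs) a
    step (Fail rest msg) cs = Fail (rest ++ concat cs) msg
```

Here the driver, not the parser, decides when input has run out. In attoparsec that role belongs to the action passed as parseWith's first argument, which returns an empty string when no more input is available.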

So, I started looking in the documentation for the module Data.Attoparsec.ByteString.Lazy in attoparsec. I was a little surprised to find that the Result type lacked a way to feed more input to a parser – it only has two constructors, Done and Fail:

data Result r
  = Fail ByteString [String] String
  | Done ByteString r

I'm guessing the idea is that the function producing the lazy bytestring in the first place should be able to produce more chunks of data on demand. That's likely what the lazy variant of recv does, but at the same time it also requires choosing a maximum length, and that doesn't rhyme with RESP3. The lazy recv isn't quite lazy in the way I needed it to be.

When looking at the parser for strict bytestrings I calmed down. This parser follows what I've learned about parsers (it's not defined exactly like this; it's parameterised in its input, but for the sake of simplicity I show it with ByteString as input):

data Result r
  = Fail ByteString [String] String
  | Partial (ByteString -> Result r)
  | Done ByteString r

Then to my delight I found that there's already a function for handling exactly my problem:

parseWith :: Monad m => m ByteString -> Parser a -> ByteString -> m (Result a)

I only needed to rewrite the existing parser to work with strict bytestrings and work out how to write a function using recv (for strict bytestrings) that fulfils the requirements for the first argument to parseWith. The first part wasn't very difficult due to the similarity between attoparsec's APIs for lazy and strict bytestrings. The second had only one complication: it turns out recv is blocking, which of course doesn't work well with parseWith. I wrapped it in timeout, based on the idea that timing out means there's no more data and the parser should be given an empty string so it finishes. I also decided to pass the parser as an argument, so I could use the same function for receiving responses to individual commands as well as to pipelines. The full receiving function is:

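{-# LANGUAGE LambdaCase, OverloadedStrings #-}

import Data.Attoparsec.ByteString (IResult (..), Parser, parseWith)
import Data.ByteString qualified as BS
import Data.Text (Text)
import Data.Text qualified as T
import Network.Socket qualified as S
import Network.Socket.ByteString qualified as SB
import System.Timeout (timeout)

-- Run the parser, starting with the input left over from the previous call,
-- and read more from the socket whenever the parser asks for it.
recvParse :: S.Socket -> BS.ByteString -> Parser r -> IO (Either Text (BS.ByteString, r))
recvParse sock leftover parser =
  parseWith receive parser leftover >>= \case
    Fail _ [] err -> pure $ Left (T.pack err)
    Fail _ ctxs err -> pure $ Left $ T.intercalate " > " (T.pack <$> ctxs) <> ": " <> T.pack err
    Partial _ -> pure $ Left "impossible error"
    Done rest result -> pure $ Right (rest, result)
  where
    -- a timeout is reported as an empty chunk, i.e. end of input
    receive =
      timeout 100_000 (SB.recv sock 4096) >>= \case
        Nothing -> pure BS.empty
        Just bs -> pure bs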
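The timeout trick can be tried without a socket. In this base-only sketch, which is my own generalisation, receiveOrEmpty wraps any blocking read and returns mempty when nothing arrives in time. A Chan stands in for the socket, which is an assumption of the example.

```haskell
import Control.Concurrent.Chan (newChan, readChan, writeChan)
import Data.Maybe (fromMaybe)
import System.Timeout (timeout)

-- | Run a blocking read, but give up after the given number of microseconds
-- and return an empty chunk instead, which a parseWith-style driver treats
-- as end of input.
receiveOrEmpty :: Monoid s => Int -> IO s -> IO s
receiveOrEmpty micros blockingRead = fromMaybe mempty <$> timeout micros blockingRead

-- | A Chan stands in for the socket in this sketch.
demo :: IO [String]
demo = do
  chan <- newChan
  writeChan chan "+PONG\r\n"
  -- 100000 microseconds = 0.1 s, the same limit as in recvParse
  let receive = receiveOrEmpty 100000 (readChan chan)
  first <- receive -- a chunk is waiting, so it is returned right away
  second <- receive -- nothing arrives within 0.1 s, so "" is returned
  pure [first, second]
```

This design treats silence as completion. A response that takes longer than the timeout to arrive would therefore reach the parser as truncated input and fail to parse, so the limit is a trade-off between latency and robustness.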

Then I only needed to rewrite sendCmd, and I wanted to do it in such a way that any remaining input data could be used by the next call to sendCmd.[3] I settled on modifying the Conn type to hold an IORef ByteString together with the socket, and then the function ended up looking like this:

import Network.Socket.ByteString.Lazy qualified as SBL

sendCmd :: Conn -> Command r -> IO (Result r)
sendCmd (Conn p) (Command k cmd) = withResource p $ \(sock, leftoverRef) -> do
  _ <- SBL.send sock $ toWireCmd cmd
  leftover <- readIORef leftoverRef
  recvParse sock leftover resp >>= \case
    Left err -> pure $ Left $ RespError "recv/parse" err
    Right (newLeftover, r) -> do
      -- keep any unconsumed input for the next command
      writeIORef leftoverRef newLeftover
      pure $ k <$> fromWireResp cmd r

What's next?

I've started looking into pub/sub, and basically all of the work described in this post is a prerequisite for that. It's not very difficult on the protocol level, but I think it's difficult to come up with a design that allows maximal flexibility. I'm not even sure it's worth the complexity.

Footnotes:

[1] This isn't that much of a problem when sticking to the request-response commands, I think. It most certainly becomes a problem with pub/sub, though.

[2] I'm sure that whatever size of buffer I choose to use, there'll be someone out there who's storing values that are larger. Then there's pipelining, which makes it even more of an issue.

[3] To be honest I'm not totally convinced there'll ever be any remaining input, unless a single Conn is used by several threads – which would lead to much pain with the current implementation – or pub/sub is used – which isn't supported yet.

Finding a type for Redis commands

Arriving at a type for Redis commands required a bit of exploration. I had some ideas early on that, for various reasons, I ended up dropping along the way. This is a post about my travels; hopefully someone finds it worth reading.

The protocol

The Redis Serialization Protocol (RESP) initially reminded me of JSON, and I thought that following the pattern of aeson might be a good idea. I decided up-front that I'd only support the latest version of RESP, i.e. version 3. So, I thought of a data type, Resp, with a constructor for each RESP3 data type, and a pair of type classes, FromResp and ToResp, for converting between Haskell types and RESP3. Then after some more reflection I realised that converting to RESP is largely pointless. The main reason to convert anything to RESP3 is to assemble a command, with its arguments, to send to Redis, but all commands are arrays of bulk strings, so it's unlikely that anyone will actually use ToResp.[1] So I scrapped the idea of ToResp. FromResp looked like this:

class FromResp a where
  fromResp :: Value -> Either FromRespError a

When I started defining commands I didn't like the number of ByteString arguments that it resulted in, so I defined a data type, Arg, and an accompanying type class for arguments, ToArg:

newtype Arg = Arg {unArg :: [ByteString]}
  deriving (Show, Semigroup, Monoid)

class ToArg a where
  toArg :: a -> Arg

Later on I saw that it might also be nice to have a type class specifically for keys, ToKey, though that's a wrapper for a single ByteString.

Implementing the functions to encode and decode the protocol was a straightforward application of attoparsec and bytestring (using its Builder).
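Since every command is an array of bulk strings, the encoding side is small. Here's a base-only sketch of it. It uses String in place of ByteString and its Builder, which is what the post actually uses, and assumes ASCII arguments so that a String's length equals its byte length.

```haskell
-- | Encode a command as a RESP array of bulk strings.
-- Assumes ASCII arguments, so the length in Chars equals the length in bytes;
-- a real client would build a ByteString with bytestring's Builder instead.
encodeCommand :: [String] -> String
encodeCommand args = "*" ++ show (length args) ++ crlf ++ concatMap bulk args
  where
    crlf = "\r\n"
    -- each argument becomes "$<length>\r\n<data>\r\n"
    bulk arg = "$" ++ show (length arg) ++ crlf ++ arg ++ crlf
```

encodeCommand ["GET", "key"] gives "*2\r\n$3\r\nGET\r\n$3\r\nkey\r\n". Concatenating the encodings of several commands is all it takes to form a pipelined request.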

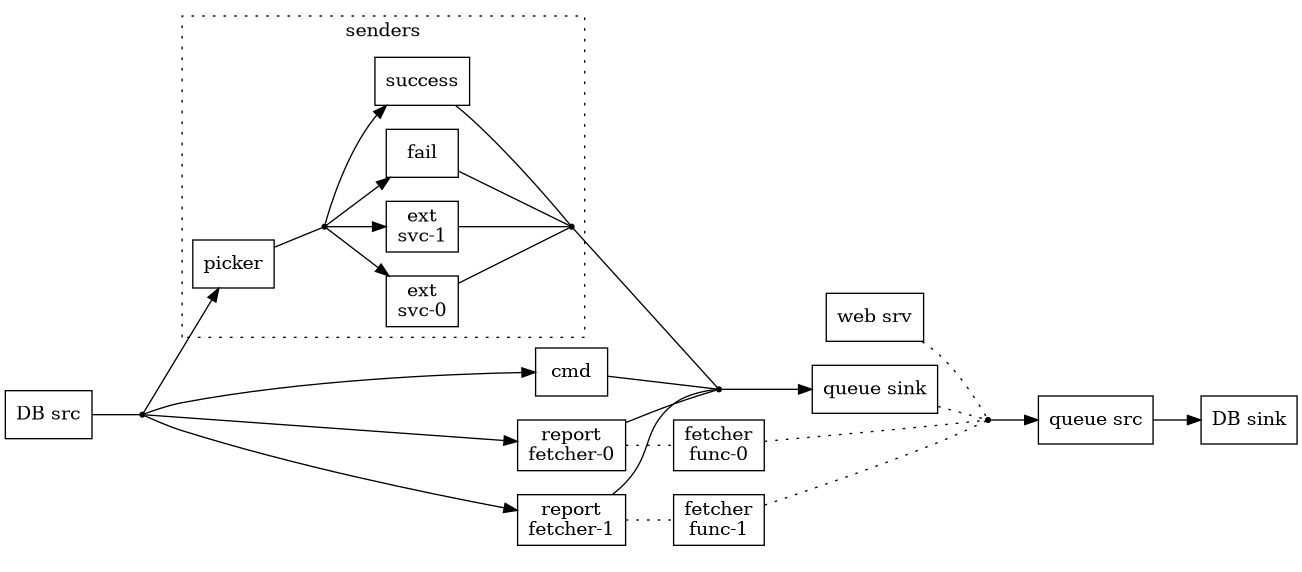

A command is a function in need of a sender

Even though supporting pipelining was one of the goals, I felt a need to make sure I'd understood the protocol, so I started off with single commands. The protocol is a simple request/response protocol at its core, so I settled on this type for commands:

type Cmd a = forall m. (Monad m) => (ByteString -> m ByteString) -> m (Either FromRespError a)

that is, a command is a function accepting a sender and returning, in the sender's monad, either an error or an a.

I wrote a helper function for defining commands, sendCmd:

sendCmd :: (Monad m, FromResp a) => [ByteString] -> (ByteString -> m ByteString) -> m (Either FromRespError a)
sendCmd cmdArgs send = do
  let cmd = encode $ Array $ map BulkString cmdArgs
  send cmd <&> decode >>= \case
    Left desc -> pure $ Left $ FromRespError "Decode" (Text.pack desc)
    Right v -> pure $ fromValue v

which made it easy to define commands. Here are two examples, append and mget:

append :: (ToArg a, ToArg b) => a -> b -> Cmd Int
append key val = sendCmd $ ["APPEND"] <> unArg (toArg key <> toArg val)

-- | https://redis.io/docs/latest/commands/mget/
mget :: (ToArg a, FromResp b) => NE.NonEmpty a -> Cmd (NE.NonEmpty b)
mget ks = sendCmd $ ["MGET"] <> unArg (foldMap1 toArg ks)

The function to send off a command and receive its response, sendAndRecieve, was just a call to send followed by a call to recv in network (the variants for lazy bytestrings).
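One appealing property of this representation is that the sender can be anything, including a fake one that never touches the network. Here's a base-only sketch. String stands in for ByteString and for the error type, and ping and fakeSender are hypothetical names used only for illustration.

```haskell
{-# LANGUAGE RankNTypes #-}

import Data.Functor.Identity (Identity (..))

-- | Same shape as the Cmd type above, with String standing in for
-- ByteString and for the error type.
type Cmd a = forall m. Monad m => (String -> m String) -> m (Either String a)

-- | Hypothetical PING command: sends the encoded request, checks the reply.
ping :: Cmd String
ping send = do
  reply <- send "*1\r\n$4\r\nPING\r\n"
  pure $ case reply of
    "+PONG\r\n" -> Right "PONG"
    other -> Left ("unexpected reply: " ++ show other)

-- | A fake sender that answers every request with a canned reply.
fakeSender :: String -> String -> Identity String
fakeSender reply _request = Identity reply
```

runIdentity (ping (fakeSender "+PONG\r\n")) gives Right "PONG". The flip side is that each command bundles its own encoding and decoding, which is exactly what gets in the way of pipelining.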

I sort of liked this representation – there's always something pleasant about finding a way to represent something as a function. There's a very big problem with it though: it's difficult to implement pipelining!

Yes, Cmd is a functor since (->) r is a functor, and thus it's possible to make it an Applicative, e.g. using free. However, to implement pipelining it's necessary to

- encode all commands, then

- concatenate them all into a single bytestring and send it

- read the response, which is a concatenation of the individual commands' responses, and

- convert each separate response from RESP3.

That isn't easy when each command contains its own encoding and decoding. The sender function would have to relinquish control after encoding the command, and resume again later to decode the response. I suspect it's doable using continuations, or monad-coroutine, but it felt complicated, and rather than travelling down that road I asked for ideas on the Haskell Discourse. The replies led me to a paper, Free delivery, and a bit later to a package, monad-batcher. When I got the pointer to the package I'd already read the paper and started implementing the ideas in it, so I decided to save exploring monad-batcher for later.

A command for free delivery

The paper Free delivery is a perfect match for pipelining in Redis, and my understanding is that it proposes a solution where

- Commands are defined as a GADT, Command a.

- Two functions are defined to serialise and deserialise a Command a. In the paper they use String as the serialisation, so show and read are used.

- A type, ActionA a, is defined that combines a command with a modification of its a result. It implements Functor.

- A free type, FreeA f a, is defined, and made into an Applicative with the constraint that f is a Functor.

- A function, serializeA, is defined that traverses a FreeA ActionA a, serialising each command.

- A function, deserializeA, is defined that traverses a FreeA ActionA a, deserialising the response for each command.
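Here's a minimal, base-only sketch of that recipe. It is my own reconstruction, not code from the paper, with these stand-ins:

- two hypothetical commands, Echo and Ping, with plain String replies instead of RESP3;
- a Command type playing the part of ActionA;
- a hand-written free applicative with the same shape as Ap in the free package.

serialise walks the structure without running anything, and deserialise consumes one reply per command in the same order.

```haskell
{-# LANGUAGE GADTs #-}

-- Hypothetical commands; replies are plain Strings instead of RESP3 values.
data Cmd r where
  Echo :: String -> Cmd String
  Ping :: Cmd ()

-- A command together with a function over its result (the paper's ActionA).
data Command a where
  Command :: (r -> a) -> Cmd r -> Command a

instance Functor Command where
  fmap f (Command k c) = Command (f . k) c

-- A free applicative with the same shape as Ap in the free package.
data Ap f a where
  Pure :: a -> Ap f a
  Ap :: f x -> Ap f (x -> a) -> Ap f a

instance Functor (Ap f) where
  fmap f (Pure a) = Pure (f a)
  fmap f (Ap x y) = Ap x ((f .) <$> y)

instance Applicative (Ap f) where
  pure = Pure
  Pure f <*> y = fmap f y
  Ap x y <*> z = Ap x (flip <$> y <*> z)

liftAp :: f a -> Ap f a
liftAp x = Ap x (Pure id)

echo :: String -> Ap Command String
echo = liftAp . Command id . Echo

ping :: Ap Command ()
ping = liftAp (Command id Ping)

toWire :: Cmd r -> String
toWire (Echo msg) = "ECHO " ++ msg
toWire Ping = "PING"

fromWire :: Cmd r -> String -> Either String r
fromWire (Echo _) reply = Right reply
fromWire Ping "PONG" = Right ()
fromWire Ping reply = Left ("unexpected reply to PING: " ++ reply)

-- Every request in the pipeline, in order; nothing is run.
serialise :: Ap Command a -> [String]
serialise (Pure _) = []
serialise (Ap (Command _ c) rest) = toWire c : serialise rest

-- One reply per command, consumed in the same order as serialise.
deserialise :: Ap Command a -> [String] -> Either String a
deserialise (Pure a) _ = Right a
deserialise (Ap (Command k c) rest) (reply : replies) = do
  x <- k <$> fromWire c reply
  f <- deserialise rest replies
  pure (f x)
deserialise (Ap _ _) [] = Left "too few replies"
```

For example, the pipeline (,) <$> echo "hi" <*> ping serialises to ["ECHO hi", "PING"], and deserialising ["hi", "PONG"] gives Right ("hi", ()). Because the pipeline is plain data, every request can be written before any reply is read.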

I defined a command type, Command a, with only three commands in it: echo, hello, and ping. I then followed the recipe above to verify that I could get it working at all. The Haskell used in the paper is showing its age, and there seems to be a Functor instance missing, but it was still straightforward, and I could verify that it worked against a locally running Redis.

Then I made a few changes…

I renamed the command type to Cmd so I could use Command for what the paper calls ActionA.

data Cmd r where
  Echo :: Text -> Cmd Text
  Hello :: Maybe Int -> Cmd ()
  Ping :: Maybe Text -> Cmd Text

data Command a = forall r. Command !(r -> a) !(Cmd r)

instance Functor Command where
  fmap f (Command k c) = Command (f . k) c

toWireCmd :: Cmd r -> ByteString
toWireCmd (Echo msg) = _
toWireCmd (Hello ver) = _
toWireCmd (Ping msg) = _

fromWireResp :: Cmd r -> Resp -> Either RespError r
fromWireResp (Echo _) = fromResp
fromWireResp (Hello _) = fromResp
fromWireResp (Ping _) = fromResp

(At this point I was still using FromResp.)

I also replaced the free applicative defined in the paper and started using free. A couple of type aliases make it a little easier to write nice signatures:

type Pipeline a = Ap Command a
type PipelineResult a = Validation [RespError] a

and defining individual pipeline commands turned into something rather mechanical. (I also swapped the order of the arguments to build a Command so I can use point-free style here.)

liftPipe :: (FromResp r) => Cmd r -> Pipeline r
liftPipe = liftAp . Command id

echo :: Text -> Pipeline Text
echo = liftPipe . Echo

hello :: Maybe Int -> Pipeline ()
hello = liftPipe . Hello

ping :: Maybe Text -> Pipeline Text
ping = liftPipe . Ping

One nice thing about switching to free was that serialisation became very simple:

toWirePipeline :: Pipeline a -> ByteString
toWirePipeline = runAp_ $ \(Command _ c) -> toWireCmd c

On the other hand, deserialisation became a little more involved, but it's not too bad:

fromWirePipelineResp :: Pipeline a -> [Resp] -> PipelineResult a
fromWirePipelineResp (Pure a) _ = pure a
fromWirePipelineResp (Ap (Command k c) p) (r : rs) =
  fromWirePipelineResp p rs <*> (k <$> liftError singleton (fromWireResp c r))
fromWirePipelineResp _ _ = Failure [RespError "fromWirePipelineResp" "Unexpected wire result"]

Everything was working nicely and I started adding support for more commands. I used the small service from work to guide my choice of what commands to add. First out was del, then get and set. After adding lpush I was pretty much ready to try to replace hedis in the service from work.

data Cmd r where
  -- echo, hello, ping
  Del :: (ToKey k) => NonEmpty k -> Cmd Int
  Get :: (ToKey k, FromResp r) => k -> Cmd r
  Set :: (ToKey k, ToArg v) => k -> v -> Cmd Bool
  Lpush :: (ToKey k, ToArg v) => k -> NonEmpty v -> Cmd Int

However, looking at the above definition, I started thinking:

- Was it really a good idea to litter Cmd with constraints like that?

- Would it make sense to keep the Cmd type a bit closer to the actual Redis commands?

- Also, maybe FromResp wasn't such a good idea after all; what if I removed it?

That brought me to the third version of the type for Redis commands.

Converging and simplifying

While adding new commands and writing instances of FromResp I slowly realised that my initial thinking of RESP3 as somewhat similar to JSON didn't really pan out. I had quickly dropped ToResp, and now the instances of FromResp didn't sit right with me. They obviously had to "follow the commands", so to speak, but at the same time allow users to bring their own types. For instance, LPUSH returns the length of the list after the push, but at the same time GET should be able to return an Int too. This led to Int's FromResp instance looking like this:

instance FromResp Int where
  fromResp (BulkString bs) = case parseOnly (AC8.signed AC8.decimal) bs of
    Left s -> Left $ RespError "FromResp" (TL.pack s)
    Right n -> Right n
  fromResp (Number n) = Right $ fromEnum n
  fromResp _ = Left $ RespError "FromResp" "Unexpected value"

I could see this becoming worse. Take the instance for Bool; I'd have to consider that

- for MOVE, Integer 1 means True and Integer 0 means False,

- for SET, SimpleString "OK" means True, and

- users would justifiably expect a bunch of bytestrings to be True, e.g. BulkString "true", BulkString "TRUE", BulkString "1", etc.

However, it's impossible to cover all the ways users can encode a Bool in a ByteString, so no matter what I do, users will end up having to wrap their Bool in a newtype and implement a fitting FromResp. On top of that, even though I haven't found any example of it yet, I fully expect there to be, somewhere in the large set of Redis commands, at least two commands each wanting an instance of a basic type that simply can't be combined into a single instance, meaning that the client library would need to do some newtype wrapping too.

No, I really didn't like it! So, could I get rid of FromResp and still offer users an API where they can use their own types as the result of commands?

To be concrete, I wanted this:

data Cmd r where
  -- other commands
  Get :: (ToKey k) => k -> Cmd (Maybe ByteString)

and I wanted the user to be able to conveniently turn a Cmd r into a Cmd s. In other words, I wanted a Functor instance. Making Cmd itself a functor isn't necessary, and I just happened to already have a functor type that wraps Cmd: the Command type I used for pipelining. If I were to use that I'd need to write a wrapper function for each command, but then I could also remove the ToKey/ToArg constraints from the constructors of Cmd r and put them on the wrappers instead. I'd get:

data Cmd r where
  -- other commands
  Get :: Key -> Cmd (Maybe ByteString)

get :: (ToKey k) => k -> Command (Maybe ByteString)
get = Command id . Get . toKey
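A small, base-only sketch of that idea, with String standing in for ByteString and a hypothetical Key newtype. Because Command is a Functor, a user-side conversion is just fmap over the result of get, with no FromResp-style class involved. runPure is a hypothetical in-memory runner used only to exercise the example.

```haskell
{-# LANGUAGE GADTs #-}

import Text.Read (readMaybe)

-- Hypothetical stand-ins: a Key newtype, and String instead of ByteString.
newtype Key = Key String

data Cmd r where
  Get :: Key -> Cmd (Maybe String)

data Command a where
  Command :: (r -> a) -> Cmd r -> Command a

instance Functor Command where
  fmap f (Command k c) = Command (f . k) c

-- The wrapper returns the raw reply type...
get :: String -> Command (Maybe String)
get = Command id . Get . Key

-- ...and users convert it with fmap; no FromResp-style class is involved.
getInt :: String -> Command (Maybe Int)
getInt key = (>>= readMaybe) <$> get key

-- Hypothetical in-memory runner standing in for sendCmd and a real server.
runPure :: [(String, String)] -> Command a -> a
runPure store (Command k c) = case c of
  Get (Key key) -> k (lookup key store)
```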

I'd also have to rewrite fromWireResp so it's more specific for each command. Instead of

fromWireResp :: Cmd r -> Resp -> Either RespError r
fromWireResp (Get _) = fromResp
...

I had to match up exactly on the possible replies to GET:

fromWireResp :: Cmd r -> Resp -> Either RespError r
fromWireResp _ (SimpleError err desc) = Left $ RespError (T.decodeUtf8 err) (T.decodeUtf8 desc)
fromWireResp (Get _) (BulkString bs) = Right $ Just bs
fromWireResp (Get _) Null = Right Nothing
...
fromWireResp _ _ = Left $ RespError "fromWireResp" "Unexpected value"

Even though it was more code, I liked it better than before, and I think it's slightly simpler code. I also hope it makes the API a bit simpler and clearer to use.

Here's an example from the code for the service I wrote for work. It reads a UTC timestamp stored in timeKey; the timestamp is a JSON string, so it needs to be decoded:

readUTCTime :: Connection -> IO (Maybe UTCTime)
readUTCTime conn =
  sendCmd conn (maybe Nothing decode <$> get timeKey) >>= \case
    Left _ -> pure Nothing
    Right datum -> pure datum

What's next?

I'm pretty happy with the command type for now, though I have a feeling I'll have to revisit Arg and ToArg at some point.

I've just turned the Connection type into a pool using resource-pool, and I've started looking at pub/sub. The latter will require some thought and experimentation, I think. Quite possibly it'll end up in a post here too.

I also have a lot of commands to add.

Footnotes:

[1] Of course one could use RESP3 as the serialisation format for storing values in Redis. Personally I think I'd prefer using something more widely used, and easier to read, such as JSON or BSON.

Why I'm writing a Redis client package

A couple of weeks ago I needed a small, hopefully temporary, service at work. It bridges a gap between the functionality provided by a legacy system and the functionality desired by a new system. The legacy system is cumbersome to work with, so we tend to prefer building anti-corruption layers rather than changing it directly, and sometimes we implement them as separate services.

This time it was good enough to run the service as a cronjob, but it did need to keep track of when it ran the last time. It felt silly to spin up a separate DB just to keep a timestamp, and using another service's DB is something I really dislike and avoid.[1] So, I ended up using the Redis instance that's used as a cache by an OSS service we host.

The last time I had a look at the options for writing a Redis client in Haskell I found two candidates, hedis and redis-io. At the time I wrote a short note about them. This time around I found that not much has changed: they are still the only two contenders, and they still suffer from the same issues:

- hedis still has the same API, and I still find it awkward.

- redis-io still requires a logger.

I once again decided to use hedis and wrote the service for work in a couple of days, but this time I thought I'd see what it would take to remove the requirement on tinylog from redis-io. I spent a few evenings on it, though I spent most of the time on "modernising" the dev setup: using Nix to build, reformatting with fourmolu, etc. I did the same for redis-resp, the main dependency of redis-io. The result of that can be found on my GitLab account:

At the moment I won't take that particular experiment any further and given that the most recent change to redis-io was in 2020 (according to its git repo) I don't think there's much interest upstream either.

Making the changes to redis-io and redis-resp made me a little curious about the Redis protocol so I started reading about it. It made me start thinking about implementing a client lib myself. How hard could it be?

I'd also asked a question about Redis client libs on r/haskell, and a response led me to redis-schema. It has a very good README, and its section on transactions makes the observation that Redis transactions are a perfect match for Applicative. This pushed me even closer to starting to write a client lib.

What pushed me over the edge was the realisation that pipelining is also a perfect match for Applicative.

For the last few weeks I've spent some of my free time reading and experimenting, and I'm enjoying it very much. We'll see where it leads, but hopefully I'll at least have a bit more to write about it.

Footnotes:

[1] One definition of a microservice I find very useful is "a service that owns its own DB schema."

Using lens-aeson to implement FromJSON

At work I sometimes need to deal with large and deep JSON objects where I'm only interested in a few of the values. If all the interesting values are on the top level, then aeson has functions that make it easy to implement FromJSON's parseJSON (Constructors and accessors), but if the values are spread out then the functions in aeson come up a bit short. That's when I reach for lens-aeson, as lenses make it very easy to work with large structures. However, I've found that using its lenses to implement parseJSON becomes a lot easier with a few helper functions.

Many of the lenses produce results wrapped in Maybe, so the first function is one that transforms a Maybe a into a Parser a. Here I make use of Parser implementing MonadFail.

infixl 8 <!>

(<!>) :: (MonadFail m) => Maybe a -> String -> m a
(<!>) mv err = maybe (fail err) pure mv
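The operator works in any MonadFail, not just aeson's Parser, which makes it easy to try out. In this base-only sketch, nested association lists stand in for a JSON object and lookup stands in for the lens-aeson traversal; both are assumptions of the example. The infixl 8 fixity matters in the real use. As far as I know, lens also declares ^? as infixl 8, so o ^? l <!> "msg" groups as (o ^? l) <!> "msg". Here lookup is an ordinary function, so the chained lookups need parentheses.

```haskell
infixl 8 <!>

-- | Turn a Maybe into a result in any MonadFail, failing with the message.
(<!>) :: MonadFail m => Maybe a -> String -> m a
mv <!> err = maybe (fail err) pure mv

-- Hypothetical nested data standing in for a decoded JWT payload.
type Claims = [(String, [(String, String)])]

preferredUsername :: MonadFail m => Claims -> m String
preferredUsername o =
  (lookup "claims" o >>= lookup "preferred_username") <!> "preferred username missing"
```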

In some code I wrote this week I used it to extract the user name out of a JWT produced by Keycloak:

instance FromJSON OurClaimsSet where
  parseJSON = ... $ \o -> do
    cs <- parseJSON o
    n <- o ^? key "preferred_username" . _String <!> "preferred username missing"
    ...
    pure $ OurClaimsSet cs n ...

Also, all the lenses start with a Value, which means the withX functions in aeson aren't a perfect fit. So I define variations of the withX functions, e.g.

withObjectV :: String -> (Value -> Parser a) -> Value -> Parser a
withObjectV s f = withObject s (f . Object)

That makes the full FromJSON instance for OurClaimsSet look like this:

instance FromJSON OurClaimsSet where
  parseJSON = withObjectV "OurClaimsSet" $ \o -> do
    cs <- parseJSON o
    n <- o ^? key "preferred_username" . _String <!> "name"
    let rs = o ^.. key "resource_access" . members . key "roles" . _Array . traverse . _String
    pure $ OurClaimsSet cs n rs

Servant and a weirdness in Keycloak

When writing a small tool to interface with Keycloak I found an endpoint that requires the content type to be application/json while the body should be plain text. (The details are in the issue.) Since servant assumes that the content type and the content match (I know, I'd always thought that was a safe assumption to make too), it doesn't work with ReqBody '[JSON] Text. Instead I had to create a custom type that's a combination of JSON and PlainText, something that turned out to require surprisingly little code:

data KeycloakJSON deriving (Typeable)

instance Accept KeycloakJSON where
  contentType _ = "application" // "json"

instance MimeRender KeycloakJSON Text where
  mimeRender _ = fromStrict . encodeUtf8

The bug has already been fixed in Keycloak, but I'm sure there are other APIs with similar weirdness so maybe this will be useful to someone else.

Hoogle setup for local development

About a week ago I asked a question on the Nix Discourse about how to create a setup for Hoogle that

- includes the locally installed packages,

- includes the package I'm working on, and ideally also

- has all local links, i.e. no links to Hackage.

I didn't get an answer there, but some people on the Nix Haskell channel on Matrix helped a bit. It seems this particular use case requires a bit of manual work. The following commands get me an almost fully working setup:

cabal haddock --haddock-internal --haddock-quickjump --haddock-hoogle --haddock-html
hoogle_dir=$(dirname $(dirname $(readlink -f $(which hoogle))))
hoogle generate --database=local.hoo \
  $(for d in $(fd -L .txt ${hoogle_dir}); do printf "--local=%s " $(dirname $d); done) \
  --local=./dist-newstyle/build/x86_64-linux/ghc-9.8.2/pkg-0.0.1/doc/html/pkg
hoogle server --local --database=local.hoo

What's missing is working links between the documentation of locally installed packages. It looks like the links in the generated documentation in Nix have a lot of relative references containing ${pkgroot}/../../../../, which is what I suspect causes the broken links.

Nix, cabal, and tests

At work I decided to attempt to change the setup of one of our projects from using

to the triplet I tend to prefer

During this I ran into two small issues relating to tests.

hspec-discover both is, and isn't, available in the shell

I found this mentioned in an open cabal ticket, and someone even made a git repo to explore it. I posted a question on the Nix Discourse.

Basically, when running cabal test in a dev shell, started with nix develop, the tool hspec-discover wasn't found. At the same time the package was installed:

$ ghc-pkg list | rg hspec
    hspec-2.9.7
    hspec-core-2.9.7
    (hspec-discover-2.9.7)
    hspec-expectations-0.8.2

and it was on the $PATH

$ whereis hspec-discover
hspec-discover: /nix/store/vaq3gvak92whk5l169r06xrbkx6c0lqp-ghc-9.2.8-with-packages/bin/hspec-discover /nix/store/986bnyyhmi042kg4v6d918hli32lh9dw-hspec-discover-2.9.7/bin/hspec-discover

The solution, as the user julm pointed out, is to simply do what cabal tells you and run cabal update first.

Dealing with tests that won't run during build

The project's tests were set up in such a way that standalone tests and integration tests are mixed into the same test executable. As the integration tests need the just-built service to be running, they can't be run during nix build. However, the only way of preventing that, without making code changes, is to pass an argument to the test executable, --skip=<prefix>, and I believe that's not possible when using developPackage. It's not a big deal though; it's perfectly fine to run the tests separately using nix develop . command .... The catch is that developPackage and the underlying machinery are smart enough to skip installing the packages required for testing when testing is turned off (using dontCheck). This is the case even when returnShellEnv is true.

Luckily it's not too difficult to deal with. I already had a variable, isDevShell, so I could simply reuse it and add the following expression to modifier:

(if isDevShell then hl.doCheck else hl.dontCheck)

Update to Hackage revisions in Nix

A few days after I published Hackage revisions in Nix I got a comment from Wolfgang W that the next release of Nix will have a callHackageDirect with support for specifying revisions.

The code in PR #284490 makes callHackageDirect accept a rev argument, like this:

haskellPackages.callHackageDirect {
  pkg = "openapi3";
  ver = "3.2.3";
  sha256 = "sha256-0F16o3oqOB5ri6KBdPFEFHB4dv1z+Pw6E5f1rwkqwi8=";
  rev = {
    revision = "4";
    sha256 = "sha256-a5C58iYrL7eAEHCzinICiJpbNTGwiOFFAYik28et7fI=";
  };
} { }

That's a lot better than using overrideCabal!

Hackage revisions in Nix

Today I got very confused when using callHackageDirect to add the openapi3 package, as it gave me errors like this:

> Using Parsec parser
> Configuring openapi3-3.2.3...
> CallStack (from HasCallStack):
>   withMetadata, called at libraries/Cabal/Cabal/src/Distribution/Simple/Ut...
> Error: Setup: Encountered missing or private dependencies:
>     base >=4.11.1.0 && <4.18,
>     base-compat-batteries >=0.11.1 && <0.13,
>     template-haskell >=2.13.0.0 && <2.20

When looking at its entry on Hackage, those weren't the version ranges for the dependencies. Also, running ghc-pkg list told me that I already had all required packages at versions matching what Hackage said. So, what's actually happening here?

It took me a while to remember revisions, but once I did it was clear that callHackageDirect always fetches the initial revision of a package (i.e. it fetches the original tar-ball uploaded by the author). After realising this it makes perfect sense – it's the only revision that's guaranteed to be there and won't change. However, it would be very useful to be able to pick a revision that actually builds.

I'm not the first one to find this, of course. It was noted and written about on the Discourse several years ago. What I didn't find, though, was a way to influence which revision is picked. It took a bit of rummaging around in the nixpkgs code, but finally I found two variables that are used in the Hackage derivation to control this:

- revision - a string with the number of the revision, and

- editedCabalFile - the SHA256 of the modified Cabal file.

Setting them is done using the overrideCabal function. This is a piece of my setup for a modified set of Haskell packages:

hl = nixpkgs.haskell.lib.compose;
hsPkgs = nixpkgs.haskell.packages.ghc963.override {
  overrides = newpkgs: oldpkgs: {
    openapi3 = hl.overrideCabal (drv: {
      revision = "4";
      editedCabalFile = "sha256-a5C58iYrL7eAEHCzinICiJpbNTGwiOFFAYik28et7fI=";
    }) (oldpkgs.callHackageDirect {
      pkg = "openapi3";
      ver = "3.2.3";
      sha256 = "sha256-0F16o3oqOB5ri6KBdPFEFHB4dv1z+Pw6E5f1rwkqwi8=";
    } { });
  };
};

It's not very ergonomic, and I think an extended version of callHackageDirect would make sense.

Bending Warp

In the past I've noticed that Warp both writes to stdout at times and produces some default HTTP responses, but I've never bothered taking the time to look up what possibilities it offers to change this behaviour. I've also always thought that I ought to find out how Warp handles signals.

If you wonder why this would be interesting to know, there are three main points:

- The environments where the services run are set up to handle structured logging. In our case it should be JSONL written to stdout, i.e. one JSON object per line.

- We've decided that the error responses we produce in our code should be JSON, so it's irritating to have to document some special cases where this isn't true just because Warp has a few default error responses.

- Signal handling is, IMHO, a very important part of writing a service that runs well in k8s as it uses signals to handle the lifetime of pods.

Looking through the Warp API

Browsing through the API documentation for Warp it wasn't too difficult to find the interesting pieces, and to see that Warp follows a fairly common pattern in Haskell libraries:

- There's a function called runSettings that takes an argument of type Settings.

- The default settings are available in a variable called defaultSettings (not very surprising).

- There are several functions for modifying the settings and they all have the same shape, setX :: X -> Settings -> Settings, which makes it easy to chain them together.

- The functions I'm interested in now are

  - setOnException - the default handler, defaultOnException, prints the exception to stdout using its Show instance

  - setOnExceptionResponse - the default responses are produced by defaultOnExceptionResponse and contain plain text response bodies

  - setInstallShutdownHandler - the default behaviour is to wait for all ongoing requests and then shut down

  - setGracefulShutdownTimeout - sets the number of seconds to wait for ongoing requests to finish, the default is to wait indefinitely
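The setX shape is easy to play with without Warp at all. Below is a toy sketch: the Settings record and the set* functions are hypothetical stand-ins that only mimic the shape of Warp's API (they are not Warp's definitions), to show why partially applied modifiers chain so nicely with (.).

```haskell
-- Toy stand-in for Warp's Settings; this record and its set* functions are
-- hypothetical and only mimic the "setX :: X -> Settings -> Settings" shape.
data Settings = Settings
  { settingsPort :: Int
  , settingsGracefulShutdownTimeout :: Maybe Int
  , settingsServerName :: String
  }
  deriving (Eq, Show)

-- Plays the role of defaultSettings; the values are arbitrary.
defaultSettings :: Settings
defaultSettings =
  Settings
    { settingsPort = 3000
    , settingsGracefulShutdownTimeout = Nothing
    , settingsServerName = "toy"
    }

setPort :: Int -> Settings -> Settings
setPort p s = s {settingsPort = p}

setGracefulShutdownTimeout :: Maybe Int -> Settings -> Settings
setGracefulShutdownTimeout t s = s {settingsGracefulShutdownTimeout = t}

setServerName :: String -> Settings -> Settings
setServerName n s = s {settingsServerName = n}

-- Every modifier has the shape X -> Settings -> Settings, so partially
-- applied modifiers compose with (.) and are applied right to left.
mySettings :: Settings
mySettings =
  setPort 8080
    . setGracefulShutdownTimeout (Just 10)
    . setServerName "my-service"
    $ defaultSettings
```

With the real Warp the chain is built the same way, starting from defaultSettings and handing the result to runSettings.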

Some experimenting

In order to experiment with these I put together a small API using servant, app, with a main function using runSettings and stringing together a bunch of modifications to defaultSettings.

main :: IO ()
main = Log.withLogger $ \logger -> do
  Log.infoIO logger "starting the server"
  runSettings (mySettings logger defaultSettings) (app logger)
  Log.infoIO logger "stopped the server"
  where
    mySettings logger = myShutdownHandler logger . myOnException logger . myOnExceptionResponse

myOnException logs JSON objects (using the logging I've written about before, here and here). It decides whether to log or not using defaultShouldDisplayException, something I copied from defaultOnException.

myOnException :: Log.Logger -> Settings -> Settings
myOnException logger = setOnException handler
  where
    handler mr e = when (defaultShouldDisplayException e) $ case mr of
      Nothing -> Log.warnIO logger $ lm $ "exception: " <> T.pack (show e)
      Just _ -> do
        Log.warnIO logger $ lm $ "exception with request: " <> T.pack (show e)

myOnExceptionResponse responds with JSON objects. It's simpler than defaultOnExceptionResponse, but it suffices for my learning.

myOnExceptionResponse :: Settings -> Settings
myOnExceptionResponse = setOnExceptionResponse handler
  where
    handler _ =
      responseLBS
        H.internalServerError500
        [(H.hContentType, "application/json; charset=utf-8")]
        (encode $ object ["error" .= ("Something went wrong" :: String)])

Finally, myShutdownHandler installs a handler for SIGTERM that logs and then shuts down.

myShutdownHandler :: Log.Logger -> Settings -> Settings
myShutdownHandler logger = setInstallShutdownHandler shutdownHandler
  where
    shutdownAction = Log.infoIO logger "closing down"
    shutdownHandler closeSocket =
      void $ installHandler sigTERM (Catch $ shutdownAction >> closeSocket) Nothing

Conclusion

I really ought to have looked into this sooner, especially as it turns out that Warp offers all the knobs and dials I could wish for to control these aspects of its behaviour. The next step is to take this and put it to use in one of the services at $DAYJOB.

Getting Amazonka S3 to work with localstack

I'm writing this in case someone else is getting strange errors when trying to use amazonka-s3 with localstack. It took me rather too long to find the answer, and neither the errors I got from Amazonka nor those from localstack were very helpful.

The code I started with for setting up the connection looked like this

main = do
  awsEnv <- AWS.overrideService localEndpoint <$> AWS.newEnv AWS.discover
  -- do S3 stuff
  where
    localEndpoint = AWS.setEndpoint False "localhost" 4566

A few years ago, when I last wrote some Haskell to talk to S3 this was enough1, but now I got some strange errors.

It turns out there are different ways to address buckets, and the default, which is used by AWS itself, isn't used by localstack. The documentation of S3AddressingStyle has more details.

So to get it to work I had to change the S3 addressing style as well and ended up with this code instead

main = do
  awsEnv <- AWS.overrideService (s3AddrStyle . localEndpoint) <$> AWS.newEnv AWS.discover
  -- do S3 stuff
  where
    localEndpoint = AWS.setEndpoint False "localhost" 4566
    s3AddrStyle svc = svc {AWS.s3AddressingStyle = AWS.S3AddressingStylePath}

Footnotes:

That was before version 2.0 of Amazonka, so it did look slightly different, but overriding the endpoint was all that was needed.

Defining a formatter for Cabal files

For Haskell code I can use lsp-format-buffer and lsp-format-region to keep my files looking nice, but I've never found a function for doing the same for Cabal files. There's a nice command line tool, cabal-fmt, for doing it, but it means having to jump to a terminal. It would of course be nicer to satisfy my needs for aesthetics directly from Emacs. A few times I've thought of writing the function myself, I mean how hard can it be? But then I've forgotten about it until the next time I'm editing a Cabal file.

A few days ago I noticed emacs-reformatter popping up in my feeds. That removed all reasons to procrastinate. It turned out to be very easy to set up.

The package doesn't have a recipe for straight.el, so it needs a :straight section. Also, the naming of the file in the package doesn't fit the package name, hence the slightly different name in the use-package declaration:1

(use-package reformatter :straight (:host github :repo "purcell/emacs-reformatter"))

Now the formatter can be defined

(reformatter-define cabal-format :program "cabal-fmt" :args '("/dev/stdin"))

in order to create functions for formatting, cabal-format-buffer and cabal-format-region, as well as a minor mode for formatting on saving a Cabal file.

Footnotes:

I'm sure it's possible to use :files to deal with this, but I'm not sure how, and my naive guess failed. It's OK to be like this until I figure it out properly.

Some practical Haskell

As I'm nearing the end of my time with my current employer I thought I'd put together some bits of practical Haskell that I've put into production. We only have a few services in Haskell, and basically I've had to sneak them into production. I'm hoping someone will find something useful. I'd be even happier if I get pointers on how to do this even better.

Logging

I've written about that earlier in three posts:

Final exception handler

After reading about the uncaught exception handler in Serokell's article I've added the following snippet to all the services.

main :: IO ()
main = do
  ...
  originalHandler <- getUncaughtExceptionHandler
  setUncaughtExceptionHandler $ handle originalHandler . lastExceptionHandler logger
  ...

lastExceptionHandler :: Logger -> SomeException -> IO ()
lastExceptionHandler logger e = do
  fatalIO logger $ lm $ "uncaught exception: " <> T.pack (displayException e)
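The composition handle originalHandler . lastExceptionHandler logger is a bit dense. A minimal sketch of the same shape, with a hypothetical chainHandlers and handlers that record what ran in an IORef instead of logging, shows that the original handler only gets involved if our own handler throws:

```haskell
import Control.Exception (SomeException, handle, throwIO, toException)
import Data.IORef (IORef, modifyIORef, newIORef, readIORef)

-- Same shape as `handle originalHandler . lastExceptionHandler logger`:
-- run our own handler, and only if it throws fall back to the original one.
chainHandlers ::
  (SomeException -> IO ()) -> -- the original handler
  (SomeException -> IO ()) -> -- our last-resort handler
  SomeException ->
  IO ()
chainHandlers original ours = handle original . ours

-- Hypothetical handler that records its name instead of logging.
recordingHandler :: IORef [String] -> String -> SomeException -> IO ()
recordingHandler ref name _ = modifyIORef ref (++ [name])

-- Hypothetical handler that fails itself, e.g. because logging broke.
failingHandler :: IORef [String] -> SomeException -> IO ()
failingHandler ref e = do
  modifyIORef ref (++ ["ours (failing)"])
  throwIO e
```

In a real service the result of chainHandlers would be passed to setUncaughtExceptionHandler from GHC.Conc, as in the snippet above.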

Handling signals

To make sure the platform we're running our services on is happy with a service, it needs to handle SIGTERM, and when running it locally during development, e.g. for manual testing, it's nice if it also handles SIGINT.

The following snippet comes from a service that needs to make sure that every iteration of its processing is completed before shutting down, hence the IORef that's used to signal whether processing should continue or not.

main :: IO ()
main = do
  ...
  cont <- newIORef True
  void $ installHandler softwareTermination (Catch $ sigHandler logger cont) Nothing
  void $ installHandler keyboardSignal (Catch $ sigHandler logger cont) Nothing
  ...

sigHandler :: Logger -> IORef Bool -> IO ()
sigHandler logger cont = do
  infoIO logger "got a signal, shutting down"
  writeIORef cont False
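The snippet only shows the handler side; the processing loop has to check the flag. The post doesn't show that loop, so here's a minimal sketch assuming a hypothetical processLoop that checks the IORef between iterations, which is what lets an iteration that has already started run to completion:

```haskell
import Control.Monad (when)
import Data.IORef (IORef, modifyIORef', newIORef, readIORef, writeIORef)

-- Hypothetical loop: keep running `step` until the flag in `cont` is False.
-- The flag is only read between iterations, so an iteration that has
-- started always finishes before the loop exits.
processLoop :: IORef Bool -> IO () -> IO ()
processLoop cont step = go
  where
    go = do
      keepGoing <- readIORef cont
      when keepGoing $ do
        step
        go
```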

Probes

Due to some details about how networking works in our platform it's currently not possible to use network-based probing. Instead we have to use files. There are two probes that are of interest:

- A startup probe, the existence of the file signals that the service has started and is about to begin processing.

- A progress probe, a timestamp signals the time the most recent iteration of processing finished1.

I've written a little bit about the latter before in A little Haskell: epoch timestamp, but here I'm including both functions.

createPidFile :: FilePath -> IO ()
createPidFile fn = getProcessID >>= writeFile fn . show

writeTimestampFile :: MonadIO m => FilePath -> m ()
writeTimestampFile fn = liftIO $ do
  getPOSIXTime >>= (writeFile fn . show) . truncate @_ @Int64 . (* 1000)

Footnotes:

The actual probing is then done using a command that compares the saved timestamp with the current time. As long as the difference is smaller than a threshold the probe succeeds.
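The probing command itself isn't shown. As a sketch of the comparison described in the footnote, assuming the file holds milliseconds since the epoch as written by writeTimestampFile, a check could look like this (isRecent and probeFile are hypothetical names, and the threshold is up to the caller):

```haskell
import Data.Int (Int64)
import Data.Time.Clock.POSIX (getPOSIXTime)
import Text.Read (readMaybe)

-- Pure core of the check: passes if the recorded timestamp (milliseconds
-- since the epoch) is less than thresholdMs older than nowMs.
isRecent :: Int64 -> Int64 -> Int64 -> Bool
isRecent thresholdMs nowMs recordedMs = nowMs - recordedMs < thresholdMs

-- Hypothetical IO wrapper: read the file written by writeTimestampFile and
-- compare with the current time. A file that doesn't parse counts as a failure.
probeFile :: Int64 -> FilePath -> IO Bool
probeFile thresholdMs fn = do
  contents <- readFile fn
  nowMs <- truncate . (* 1000) <$> getPOSIXTime
  pure $ maybe False (isRecent thresholdMs nowMs) (readMaybe contents)
```

To use it as an exec probe the Bool would still have to be turned into an exit code, e.g. with exitFailure from System.Exit.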

Making an Emacs major mode for Cabal using tree-sitter

A few days ago I posted on r/haskell that I'm attempting to put together a Cabal grammar for tree-sitter. Some things are still missing, but it covers enough to start doing what I initially intended: experiment with writing an alternative Emacs major mode for Cabal.

The documentation for the tree-sitter integration is very nice, and several of the major modes already have tree-sitter variants, called X-ts-mode where X is e.g. python, so putting together the beginning of a major mode wasn't too much work.

Configuring Emacs

First off I had to make sure the parser for Cabal was installed. The snippet for that looks like this1

(use-package treesit
  :straight nil
  :ensure nil
  :commands (treesit-install-language-grammar)
  :init
  (setq treesit-language-source-alist
        '((cabal . ("https://gitlab.com/magus/tree-sitter-cabal.git")))))

With that in place the parser is installed using M-x treesit-install-language-grammar and choosing cabal.

After that I removed my configuration for haskell-mode and added the following snippet to get my own major mode into my setup.

(use-package my-cabal-mode
  :straight (:type git
             :repo "git@gitlab.com:magus/my-emacs-pkgs.git"
             :branch "main"
             :files (:defaults "my-cabal-mode/*el")))

The major mode and font-locking

The built-in elisp documentation actually has a section on writing a major mode with tree-sitter, so it was easy to get started. Setting up the font-locking took a bit of trial-and-error, but once I had comments looking the way I wanted it was easy to add to the setup. Oh, and yes, there's a section on font-locking with tree-sitter in the documentation too. At the moment it looks like this

(defvar cabal--treesit-font-lock-setting
  (treesit-font-lock-rules
   :feature 'comment
   :language 'cabal
   '((comment) @font-lock-comment-face)

   :feature 'cabal-version
   :language 'cabal
   '((cabal_version _) @font-lock-constant-face)

   :feature 'field-name
   :language 'cabal
   '((field_name) @font-lock-keyword-face)

   :feature 'section-name
   :language 'cabal
   '((section_name) @font-lock-variable-name-face))
  "Tree-sitter font-lock settings.")

;;;###autoload
(define-derived-mode my-cabal-mode fundamental-mode "My Cabal"
  "My mode for Cabal files"
  (when (treesit-ready-p 'cabal)
    (treesit-parser-create 'cabal)
    ;; set up treesit
    (setq-local treesit-font-lock-feature-list
                '((comment field-name section-name)
                  (cabal-version)
                  ()
                  ()))
    (setq-local treesit-font-lock-settings cabal--treesit-font-lock-setting)
    (treesit-major-mode-setup)))

;;;###autoload
(add-to-list 'auto-mode-alist '("\\.cabal\\'" . my-cabal-mode))

Navigation

One of the reasons I want to experiment with tree-sitter is to use it for code navigation. My first attempt is to translate haskell-cabal-section-beginning (in haskell-mode, the source) to using tree-sitter. First a convenience function to recognise if a node is a section or not:

(defun cabal--node-is-section-p (n)
  "Predicate to check if treesit node N is a Cabal section."
  (member (treesit-node-type n)
          '("benchmark" "common" "executable" "flag" "library" "test_suite")))

That makes it possible to use treesit-parent-until to traverse the nodes until hitting a section node:

(defun cabal-goto-beginning-of-section ()
  "Go to the beginning of the current section."
  (interactive)
  (when-let* ((node-at-point (treesit-node-at (point)))
              (section-node (treesit-parent-until node-at-point #'cabal--node-is-section-p))
              (start-pos (treesit-node-start section-node)))
    (goto-char start-pos)))

And the companion function, to go to the end of a section, is very similar:

(defun cabal-goto-end-of-section ()
  "Go to the end of the current section."
  (interactive)
  (when-let* ((node-at-point (treesit-node-at (point)))
              (section-node (treesit-parent-until node-at-point #'cabal--node-is-section-p))
              (end-pos (treesit-node-end section-node)))
    (goto-char end-pos)))

Footnotes:

I'm using straight.el and use-package in my setup, but hopefully the snippets can easily be converted to other ways of configuring Emacs.

Logging with class

In two previous posts I've described how I currently compose log messages and how I do the actual logging. This post wraps up this particular topic for now with a couple of typeclasses, a default implementation, and an example showing how I use them.

The typeclasses

First off I want a typeclass for the logging itself. It's just a collection of functions taking a LogMsg and returning unit (in a monad).

class Monad m => LoggerActions m where
  debug :: LogMsg -> m ()
  info :: LogMsg -> m ()
  warn :: LogMsg -> m ()
  err :: LogMsg -> m ()
  fatal :: LogMsg -> m ()

In order to provide a default implementation I also need a way to extract the logger itself.

class Monad m => HasLogger m where
  getLogger :: m Logger

Default implementation

Using the two typeclasses above it's now possible to define a type with an implementation of LoggerActions that is usable with deriving via.

newtype StdLoggerActions m a = MkStdZLA (m a)
  deriving (Functor, Applicative, Monad, MonadIO, HasLogger)

And its implementation of LoggerActions looks like this:

instance (HasLogger m, MonadIO m) => LoggerActions (StdLoggerActions m) where
  debug msg = getLogger >>= flip debugIO msg
  info msg = getLogger >>= flip infoIO msg
  warn msg = getLogger >>= flip warnIO msg
  err msg = getLogger >>= flip errIO msg
  fatal msg = getLogger >>= flip fatalIO msg

An example

Using the definitions above is fairly straightforward. First a type that derives its implementation of LoggerActions from StdLoggerActions:

newtype EnvT a = EnvT {runEnvT :: ReaderT Logger IO a}
  deriving newtype (Functor, Applicative, Monad, MonadIO, MonadReader Logger)
  deriving (LoggerActions) via (StdLoggerActions EnvT)

In order for it to work, and compile, it needs an implementation of HasLogger too.

instance HasLogger EnvT where
  getLogger = ask

All that's left is a function using a constraint on LoggerActions (doStuff) and a main function creating a logger, constructing an EnvT, and then running doStuff in it.

doStuff :: LoggerActions m => m ()
doStuff = do
  debug "a log line"
  info $ "another log line" #+ ["extras" .= (42 :: Int)]

main :: IO ()
main = withLogger $ \logger -> runReaderT (runEnvT doStuff) logger

A take on logging

In my previous post I described a type, with instances and a couple of useful functions for composing log messages. To actually make use of that a bit more is needed, i.e. the actual logging. In this post I'll share that part of the logging setup I've been using in the Haskell services at $DAYJOB.

The logger type

The logger will be a wrapper around fast-logger's FastLogger, even though that's not really visible.

newtype Logger = Logger (LogMsg -> IO ())

Its nature as a wrapper makes it natural to follow the API of fast-logger, with some calls to liftIO added.

newLogger :: MonadIO m => m (Logger, m ())
newLogger = liftIO $ do
  (fastLogger, cleanUp) <- newFastLogger $ LogStdout defaultBufSize
  pure (Logger (fastLogger . toLogStr @LogMsg), liftIO cleanUp)

The implementation of withLogger is pretty much a copy of what I found in fast-logger, just adapted to the newLogger above.

withLogger :: (MonadMask m, MonadIO m) => (Logger -> m ()) -> m ()
withLogger go = bracket newLogger snd (go . fst)

Logging functions

All logging functions will follow the same pattern so it's easy to break out the common parts.

logIO :: MonadIO m => Text -> Logger -> LogMsg -> m ()
logIO lvl (Logger ls) msg = do
  t <- formatTime defaultTimeLocale "%Y-%m-%dT%H:%M:%S%03QZ" <$> liftIO getCurrentTime
  let bmsg =
        ""
          :# [ "correlation-id" .= ("no-correlation-id" :: Text)
             , "timestamp" .= t
             , "level" .= lvl
             ]
  liftIO $ ls $ bmsg <> msg

With that in place the logging functions become very short and sweet.

debugIO, infoIO, warnIO, errIO, fatalIO :: MonadIO m => Logger -> LogMsg -> m ()
debugIO = logIO "debug"
infoIO = logIO "info"
warnIO = logIO "warn"
errIO = logIO "error"
fatalIO = logIO "fatal"
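A note on the format string used for the timestamp: in the time package's formatTime, %y is the year of the century (two digits) while %Y is the full year, so only the latter gives an ISO 8601 style timestamp. A small sketch with a fixed time, leaving out the fractional seconds, makes the difference visible:

```haskell
import Data.Time (UTCTime (..), defaultTimeLocale, formatTime, fromGregorian, secondsToDiffTime)

-- A fixed point in time, 2024-01-02 03:04:05 UTC, so the output is predictable.
sample :: UTCTime
sample = UTCTime (fromGregorian 2024 1 2) (secondsToDiffTime (3 * 3600 + 4 * 60 + 5))

-- %Y is the full year, %y only the year of the century (two digits).
fullYear, shortYear :: String
fullYear = formatTime defaultTimeLocale "%Y-%m-%dT%H:%M:%SZ" sample
shortYear = formatTime defaultTimeLocale "%y-%m-%dT%H:%M:%SZ" sample
```

Here fullYear is "2024-01-02T03:04:05Z" and shortYear is "24-01-02T03:04:05Z".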

Simple example of usage

A very simple example showing how it could be used would be something like this

main :: IO ()
main = withLogger $ \logger -> do
  debugIO logger "a log line"
  infoIO logger $ "another log line" #+ ["extras" .= (42 :: Int)]

A take on log messages

At $DAYJOB we use structured logging with rather little actual structure; the only rules are

- Log to stdout.

- Log one JSON object per line.

- The only required fields are

  - message - a human readable string describing the event

  - level - the severity of the event, debug, info, warn, error, or fatal.

  - timestamp - the time of the event

  - correlation-id - an ID passed between services to allow finding related events

Beyond that pretty much anything goes, any other fields that are useful in that service, or even in that one log message is OK.

My first take was very ad-hoc, mostly because there were other parts of the question "How do I write a service in Haskell, actually?" that needed more attention – then I read Announcing monad-logger-aeson: Structured logging in Haskell for cheap. Sure, I'd looked at some of the logging libraries on Hackage but not really found anything that seemed like it would fit very well. Not until monad-logger-aeson, that is. Well, at least until I realised it didn't quite fit the rules we have.

It did give me some ideas of how to structure my current logging code, which was rather simple but very awkward to use. This is what I came up with, and after using it in a handful of services I find it kind of nice to work with. Let me know what you think.

The log message type

I decided that a log message must always contain the text describing the event. It's the one thing that's sure to be known at the point where the developer writes the code to log an event. All the other mandatory parts can, and probably should as far as possible, be added by the logging library itself. So I ended up with this type.

data LogMsg = Text :# [Pair]
  deriving (Eq, Show)

It should however be easy to add custom parts at the point of logging, so I added an operator for that.

(#+) :: LogMsg -> [Pair] -> LogMsg
(#+) (msg :# ps0) ps1 = msg :# (ps0 <> ps1)

The ordering is important, i.e. ps0 <> ps1, as aeson's object function will take the last value for a field, and I want to be able to give keys in a log message new values by overwriting them later on.
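The effect of the ordering can be seen without aeson. This sketch uses a stand-in Pair of two strings and Data.Map.fromList, which, like object, keeps the last value given for a key; addPairs and toObject are illustrative names only:

```haskell
import qualified Data.Map.Strict as Map

-- Stand-in for aeson's Pair (which is (Key, Value)): a key and a value as strings.
type Pair = (String, String)

-- Same ordering as (#+): pairs added later go after the existing ones.
addPairs :: [Pair] -> [Pair] -> [Pair]
addPairs ps0 ps1 = ps0 <> ps1

-- Like object, build a key map from the pairs. Map.fromList keeps the
-- last value seen for a key, so later pairs overwrite earlier ones.
toObject :: [Pair] -> Map.Map String String
toObject = Map.fromList
```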

Instances to use it with fast-logger

The previous logging code used fast-logger and it had worked really well, so I decided to stick with it. Making LogMsg an instance of ToLogStr is key, and as the rules require logging of JSON objects it also needs to be an instance of ToJSON.

instance ToJSON LogMsg where
  toJSON (msg :# ps) = object $ ps <> ["message" .= msg]

instance ToLogStr LogMsg where
  toLogStr msg = toLogStr (encode msg) <> "\n"

Instance to make it easy to log a string

It's common to just want to log a single string and nothing else, so it's handy if LogMsg is an instance of IsString.

instance IsString LogMsg where
  fromString msg = pack msg :# []

Combining log messages

When writing the previous logging code I'd regularly felt pain from the lack of a nice way to combine log messages. With the definition of LogMsg above it's not difficult to come up with reasonable instances for both Semigroup and Monoid.

instance Semigroup LogMsg where
  ("" :# ps0) <> (msg1 :# ps1) = msg1 :# (ps0 <> ps1)
  (msg0 :# ps0) <> ("" :# ps1) = msg0 :# (ps0 <> ps1)
  (msg0 :# ps0) <> (msg1 :# ps1) = (msg0 <> " - " <> msg1) :# (ps0 <> ps1)

instance Monoid LogMsg where
  mempty = ""
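The three cases can be checked with a stand-in for LogMsg that only needs base, using String instead of Text and pairs of strings instead of aeson's Pair (both substitutions are assumptions made for the sketch). The first case is what makes it possible to prepend a message that carries only fields:

```haskell
-- Stand-in for LogMsg: String instead of Text and (String, String)
-- instead of aeson's Pair, so it runs with base only.
data LogMsg = String :# [(String, String)]
  deriving (Eq, Show)

-- Same three cases as above: an empty text on either side keeps the other
-- message, otherwise the texts are joined with " - ". The pairs are always
-- concatenated left to right.
instance Semigroup LogMsg where
  ("" :# ps0) <> (msg1 :# ps1) = msg1 :# (ps0 <> ps1)
  (msg0 :# ps0) <> ("" :# ps1) = msg0 :# (ps0 <> ps1)
  (msg0 :# ps0) <> (msg1 :# ps1) = (msg0 <> " - " <> msg1) :# (ps0 <> ps1)

instance Monoid LogMsg where
  mempty = "" :# []
```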

In closing

What's missing above is the automatic handling of the remaining fields. I'll try to get back to that part soon. For now I'll just say that the log message API above made the implementation nice and straightforward.

Composing instances using deriving via

Today I watched the very good, and short, video from Tweag on how to Avoid boilerplate instances with -XDerivingVia. It made me realise that I've read about this before, but then the topic was on reducing boilerplate with MTL-style code.

Given that I'd forgotten about it I'm writing this mostly as a note to myself.

The example from the Tweag video, slightly changed

The code for making film ratings into a Monoid, when translated to the UK, would look something like this:

{-# LANGUAGE DerivingVia #-}
{-# LANGUAGE GeneralizedNewtypeDeriving #-}

module DeriveMonoid where

newtype Supremum a = MkSup a
  deriving stock (Bounded, Eq, Ord)
  deriving newtype (Show)

instance Ord a => Semigroup (Supremum a) where
  (<>) = max

instance (Bounded a, Ord a) => Monoid (Supremum a) where
  mempty = minBound

data FilmClassification
  = Universal
  | ParentalGuidance
  | Suitable12
  | Suitable15
  | Adults
  | Restricted18
  deriving stock (Bounded, Eq, Ord)
  deriving (Monoid, Semigroup) via (Supremum FilmClassification)
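For this particular case base already has a newtype with the same two instances: Max from Data.Semigroup, whose Semigroup instance uses max and whose Monoid instance (given Bounded) uses minBound for mempty. So, as a sketch, FilmClassification could derive via Max instead of a hand-rolled Supremum:

```haskell
{-# LANGUAGE DerivingVia #-}

import Data.Semigroup (Max (..))

data FilmClassification
  = Universal
  | ParentalGuidance
  | Suitable12
  | Suitable15
  | Adults
  | Restricted18
  deriving stock (Bounded, Eq, Ord, Show)
  -- Max from base: (<>) = max, and mempty = minBound when the type is Bounded.
  deriving (Semigroup, Monoid) via (Max FilmClassification)
```

With that, mconcat over a list of ratings gives the most restrictive one, and mempty is Universal.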

Composing by deriving

First let's write up a silly class for writing to stdout, a single operation will do.

class Monad m => StdoutWriter m where
  writeStdoutLn :: String -> m ()

Then we'll need a type to attach the implementation to.

newtype SimpleStdoutWriter m a = SimpleStdoutWriter (m a)
  deriving (Functor, Applicative, Monad, MonadIO)

and of course an implementation

instance MonadIO m => StdoutWriter (SimpleStdoutWriter m) where
  writeStdoutLn = liftIO . putStrLn

Now let's create an app environment based on ReaderT and use deriving via to give it an implementation of StdoutWriter via SimpleStdoutWriter.

newtype AppEnv a = AppEnv {unAppEnv :: ReaderT Int IO a}
  deriving
    ( Functor
    , Applicative
    , Monad
    , MonadIO
    , MonadReader Int
    )
  deriving (StdoutWriter) via (SimpleStdoutWriter AppEnv)

Then a quick test to show that it actually works.

λ> runReaderT (unAppEnv $ writeStdoutLn "hello, world!") 0
hello, world!

Patching in Nix

Today I wanted to move one of my Haskell projects to GHC 9.2.4 and found that envy didn't compile due to an upper bound on its dependency on bytestring; it didn't allow 0.11.*.

After creating a PR I decided I didn't want to wait for upstream, so instead I started looking into options for patching the source of a derivation of a package from Hackage. In the past I've written about building Haskell packages from GitHub, and in an older post I used callHackageDirect to build Haskell packages from Hackage. I wasn't sure how to patch up a package from Hackage though, but after a bit of digging through haskell-modules I found appendPatch.

The patch wasn't too hard to put together once I recalled the name of the patch queue tool I used regularly years ago, quilt. I put the resulting patch in the nix folder I already had, and the full override ended up looking like this:

...
hl = haskell.lib;
hsPkgs = haskell.packages.ghc924;
extraHsPkgs = hsPkgs.override {
  overrides = self: super: {
    envy = hl.appendPatch (self.callHackageDirect {
      pkg = "envy";
      ver = "2.1.0.0";
      sha256 = "sha256-yk8ARRyhTf9ImFJhDnVwaDiEQi3Rp4yBvswsWVVgurg=";
    } { }) ./nix/envy-fix-deps.patch;
  };
};
...

A little Haskell: epoch timestamp

The need to get the current Unix time comes up every now and then. Just this week I needed it in order to add a k8s liveness probe1.

While it's often rather straightforward to get the Unix time as an integer in other languages2, in Haskell there's a bit of type tetris involved.

- getPOSIXTime gives me a POSIXTime, which is an alias for NominalDiffTime.

- NominalDiffTime implements RealFrac and can thus be converted to anything implementing Integral (I wanted it as Int64).

- NominalDiffTime also implements Num, so if the timestamp needs better precision than seconds it's easy to do (I needed milliseconds).

The combination of the above is something like

truncate <$> getPOSIXTime

In my case the full function of writing the timestamp to a file looks like this

writeTimestampFile :: MonadIO m => Path Abs File -> m ()
writeTimestampFile afn = liftIO $ do
  truncate @_ @Int64 . (* 1000) <$> getPOSIXTime >>= writeFile (fromAbsFile afn) . show
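To make the type tetris concrete, here's a small sketch with the types pinned down by signatures instead of type applications (toEpochSeconds, toEpochMillis and currentEpochMillis are just illustrative names):

```haskell
import Data.Int (Int64)
import Data.Time.Clock.POSIX (POSIXTime, getPOSIXTime)

-- POSIXTime is NominalDiffTime, which is RealFrac, so truncate converts it
-- to any Integral type; the signature picks Int64 here.
toEpochSeconds :: POSIXTime -> Int64
toEpochSeconds = truncate

-- NominalDiffTime is also Num, so scaling by 1000 before truncating keeps
-- millisecond precision.
toEpochMillis :: POSIXTime -> Int64
toEpochMillis = truncate . (* 1000)

currentEpochMillis :: IO Int64
currentEpochMillis = toEpochMillis <$> getPOSIXTime
```

For example, toEpochSeconds 1.75 is 1 while toEpochMillis 1.75 is 1750.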

Footnotes:

Over the last few days I've looked into k8s probes. Since we're using Istio, TCP probes are of very limited use, and as the service in question doesn't offer an HTTP API I decided to use a liveness command that checks that the contents of a file is a sufficiently recent epoch timestamp.

Rust's Chrono package has Utc.timestamp(t). Python has time.time(). Golang has Time.Unix.

Simple nix flake for Haskell development

Recently I've moved over to using flakes in my Haskell development projects. It took me a little while to arrive at a pattern for a Haskell development flake that I like. I'm hoping sharing it might help others making the same change.

{
  inputs = {
    nixpkgs.url = "github:nixos/nixpkgs";
    flake-utils.url = "github:numtide/flake-utils";
  };

  outputs = { self, nixpkgs, flake-utils }:
    flake-utils.lib.eachDefaultSystem (system:
      with nixpkgs.legacyPackages.${system};
      let
        t = lib.trivial;
        hl = haskell.lib;

        name = "project-name";

        project = devTools: # [1]
          let addBuildTools = (t.flip hl.addBuildTools) devTools;
          in haskellPackages.developPackage {
            root = lib.sourceFilesBySuffices ./. [ ".cabal" ".hs" ];
            name = name;
            returnShellEnv = !(devTools == [ ]); # [2]

            modifier = (t.flip t.pipe) [
              addBuildTools
              hl.dontHaddock
              hl.enableStaticLibraries
              hl.justStaticExecutables
              hl.disableLibraryProfiling
              hl.disableExecutableProfiling
            ];
          };

      in {
        packages.pkg = project [ ]; # [3]

        defaultPackage = self.packages.${system}.pkg;

        devShell = project (with haskellPackages; [ # [4]
          cabal-fmt
          cabal-install
          haskell-language-server
          hlint
        ]);
      });
}

The main issue I ran into is getting a development shell out of haskellPackages.developPackage: it requires returnShellEnv to be true, something that isn't too easy to find out. This means that the only solution I've found to getting a development shell is to have separate expressions for building and getting a shell. In the above flake the build expression, [3], passes an empty list of development tools, the argument devTools at [1], while the development shell expression, [4], passes in a list of tools needed for development only. The decision of whether the expression is for building or for a development shell, [2], then looks at the list of development tools passed in.

Fallback of actions

In a tool I'm writing I want to load a file that may reside on the local disk, but if it isn't there I want to fetch it from the web. Basically it's very similar to having a cache and dealing with a miss, except in my case I don't populate the cache.

Let me first define the functions to play with

loadFromDisk :: String -> IO (Either String Int)
loadFromDisk k@"bad key" = do
  putStrLn $ "local: " <> k
  pure $ Left $ "no such local key: " <> k
loadFromDisk k = do
  putStrLn $ "local: " <> k
  pure $ Right $ length k

loadFromWeb :: String -> IO (Either String Int)
loadFromWeb k@"bad key" = do
  putStrLn $ "web: " <> k
  pure $ Left $ "no such remote key: " <> k
loadFromWeb k = do
  putStrLn $ "web: " <> k
  pure $ Right $ length k

Discarded solution: using the Alternative of IO directly

It's fairly easy to get the desired behaviour, but Alternative of IO is based on exceptions, which doesn't strike me as a good idea unless one is using IO directly. That is fine in a smallish application, but in my case it makes sense to use tagless style (or the ReaderT pattern), so I'll skip exploring this option completely.
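For reference, this is roughly what the discarded option looks like. The loaders here are hypothetical variants that signal failure by throwing an IOException (via ioError) rather than returning Either. Note that IO's <|> only catches IOException; other exceptions go straight through.

```haskell
import Control.Applicative ((<|>))

-- Hypothetical loaders that signal failure by throwing an IOException
-- instead of returning Either. This one always fails, for illustration.
loadFromDiskIO :: String -> IO Int
loadFromDiskIO k = ioError (userError ("no such local key: " <> k))

loadFromWebIO :: String -> IO Int
loadFromWebIO k = pure (length k)

-- (<|>) for IO runs the first action and, only if it throws an
-- IOException, runs the second one.
loadIO :: String -> IO Int
loadIO k = loadFromDiskIO k <|> loadFromWebIO k
```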

First attempt: lifting into the Alternative of Either e

There's an instance of Alternative for Either e in version 0.5 of transformers. It's deprecated and it's gone in newer versions of the library, as one really should use Except or ExceptT instead. Even if I don't think it's where I want to end up, it's not an altogether bad place to start.

Now let's define a function using liftA2 (<|>) to make it easy to see what the behaviour is:

fallBack ::
  Applicative m =>
  m (Either String res) ->
  m (Either String res) ->
  m (Either String res)
fallBack = liftA2 (<|>)

λ> loadFromDisk "bad key" `fallBack` loadFromWeb "good key"
local: bad key
web: good key
Right 8
λ> loadFromDisk "bad key" `fallBack` loadFromWeb "bad key"
local: bad key
web: bad key
Left "no such remote key: bad key"

The first example shows that it falls back to loading from the web, and the second one shows that it's only the last failure that survives. The latter part, that only the last failure survives, isn't ideal, but I think I can live with that. If I were interested in collecting all failures I would reach for Validation from validation-selective (there's one in validation that should work too).

So far so good, but the next example shows a behaviour I don't want:

λ> loadFromDisk "good key" `fallBack` loadFromWeb "good key"
local: good key
web: good key
Right 8

Or, to make it even more explicit:

λ> loadFromDisk "good key" `fallBack` undefined
local: good key
*** Exception: Prelude.undefined
CallStack (from HasCallStack):
  error, called at libraries/base/GHC/Err.hs:79:14 in base:GHC.Err
  undefined, called at <interactive>:451:36 in interactive:Ghci4

There's no short-circuiting![1]

The behaviour I want is of course that if the first action is successful, then the second action shouldn't take place at all.

It looks like either <|> is strict in its second argument, or maybe it's liftA2 that forces it. I've not bothered digging into the details; it's enough to observe it to realise that this approach isn't good enough.
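For what it's worth, the culprit is liftA2: in IO, `liftA2 f a b` runs `a`, then `b`, and only then applies `f`. An Applicative has no way of letting the result of `a` decide whether `b` runs at all. The sketch below counts how often the second action runs when combined with a function that ignores its second argument on success; `orElse` is a local stand-in for the deprecated instance and `secondActionRuns` is a made-up name.

```haskell
import Control.Applicative (liftA2)
import Data.IORef (modifyIORef, newIORef, readIORef)

-- Local stand-in for the deprecated Alternative (Either e) instance:
-- it never inspects its second argument when the first is a Right.
orElse :: Either e a -> Either e a -> Either e a
orElse l@(Right _) _ = l
orElse (Left _) r = r

-- Returns how many times the second action was executed even though
-- the first one already succeeded.
secondActionRuns :: IO Int
secondActionRuns = do
  counter <- newIORef (0 :: Int)
  let first = pure (Right 1) :: IO (Either String Int)
      second = modifyIORef counter (+ 1) >> pure (Right 2)
  _ <- liftA2 orElse first second
  readIORef counter
```

It returns 1: the second action ran even though its result was thrown away. Short-circuiting needs `>>=`, so that the first result can be inspected before deciding what to run next.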

Second attempt: cutting it short, manually

Fixing the lack of short-circuiting after the first success isn't too difficult to do manually. Something like this does it:

fallBack :: Monad m => m (Either String a) -> m (Either String a) -> m (Either String a)
fallBack first other = do
  first >>= \case
    r@(Right _) -> pure r
    r@(Left _) -> (r <|>) <$> other

It does indeed show the behaviour I want

λ> loadFromDisk "bad key" `fallBack` loadFromWeb "good key"
local: bad key
web: good key
Right 8
λ> loadFromDisk "bad key" `fallBack` loadFromWeb "bad key"
local: bad key
web: bad key
Left "no such remote key: bad key"
λ> loadFromDisk "good key" `fallBack` undefined
local: good key
Right 8
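Assuming the deprecated instance behaves as `Left _ <|> n = n`, `(r <|>) <$> other` is just `other` when `r` is a `Left`. That means the same function can be written without the deprecated instance at all. A minimal sketch, renamed `fallBack'` and with the error type generalised:

```haskell
{-# LANGUAGE LambdaCase #-}

-- Same short-circuiting as the manual fallBack, but without relying on the
-- deprecated Alternative (Either e) instance: on Left, simply run `other`
-- and return its result (so only the last failure survives).
fallBack' :: Monad m => m (Either e a) -> m (Either e a) -> m (Either e a)
fallBack' first other = first >>= \case
  r@(Right _) -> pure r
  Left _ -> other
```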

Excellent! And to switch over to Validation one just has to switch constructors: Right becomes Success and Left becomes Failure. Collecting the failures by concatenating strings isn't the best idea, of course, but switching to some other Monoid (that's the constraint on the failure type) isn't too difficult.

fallBack :: (Monad m, Monoid e) => m (Validation e a) -> m (Validation e a) -> m (Validation e a)
fallBack first other = do
  first >>= \case
    r@(Success _) -> pure r
    r@(Failure _) -> (r <|>) <$> other
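To see failures being collected without pulling in the package, here's a sketch with a minimal stand-in for Validation (only the two constructors; the real type in validation-selective offers a lot more) and a list of messages as the error type instead of a concatenated String. The name `fallBackV` is made up, and the combining of failures is spelled out by hand rather than using the library's `<|>`. Combining two failures only needs Semigroup.

```haskell
{-# LANGUAGE LambdaCase #-}

-- Minimal stand-in for Validation from validation-selective
-- (assumption: only the constructors matter for this example).
data Validation e a = Failure e | Success a
  deriving (Eq, Show)

-- Stop at the first Success; if both fail, keep both failures by
-- combining them with (<>).
fallBackV :: (Monad m, Semigroup e) => m (Validation e a) -> m (Validation e a) -> m (Validation e a)
fallBackV first other = first >>= \case
  r@(Success _) -> pure r
  Failure e -> other >>= \case
    Failure e' -> pure (Failure (e <> e'))
    s@(Success _) -> pure s
```

With `[String]` as the error type, two failed lookups give `Failure ["no such local key", "no such remote key"]` rather than one glued-together string.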

Third attempt: pulling failures out to MonadPlus

After writing the fallBack function I still wanted to explore other solutions. There's almost always something more out there in the Haskell ecosystem, right? So I asked in the #haskell-beginners channel on the Functional Programming Slack. The way I phrased the question resulted in answers that iterate over a list of actions and stop at the first success.

The first suggestion had me a little confused at first, but once I re-organised the helper function a little it made more sense to me.

mFromRight :: MonadPlus m => m (Either err res) -> m res
mFromRight = (either (const mzero) return =<<)
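It may help to try mFromRight in a MonadPlus other than IO. A small sketch using Maybe and lists (the examples are mine): each Left is replaced by mzero, and each Right is unwrapped.

```haskell
import Control.Monad (MonadPlus, mzero)

-- The helper from above: bind into the Either and replace Left with mzero.
mFromRight :: MonadPlus m => m (Either err res) -> m res
mFromRight = (either (const mzero) return =<<)

-- In Maybe, a Left turns into Nothing.
inMaybe :: (Maybe Int, Maybe Int)
inMaybe = (mFromRight (Just (Right 3)), mFromRight (Just (Left "no")))

-- In the list monad, Lefts are dropped and Rights are kept.
inList :: [Int]
inList = mFromRight [Left "a", Right 1, Left "b", Right 2]
```

Here `inMaybe` is `(Just 3, Nothing)` and `inList` is `[1, 2]`. In IO, however, mzero is an exception, which is what shows up when every action fails.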

To use it, put the actions in a list, map the helper above over it, and finally run asum on it all.[2] I think it makes it a little clearer what happens if it's rewritten like this:

firstRightM :: MonadPlus m => [m (Either err res)] -> m res
firstRightM = asum . fmap go
  where
    go m = m >>= either (const mzero) return

λ> firstRightM [loadFromDisk "bad key", loadFromWeb "good key"]
local: bad key
web: good key
8
λ> firstRightM [loadFromDisk "good key", undefined]
local: good key
8

So far so good, but I left out the case where both fail, because that's sort of the fly in the ointment here:

λ> firstRightM [loadFromDisk "bad key", loadFromWeb "bad key"]
local: bad key
web: bad key
*** Exception: user error (mzero)

It's not nice to be back to dealing with exceptions, but it's possible to recover, e.g. by appending <|> pure 0.

λ> firstRightM [loadFromDisk "bad key", loadFromWeb "bad key"] <|> pure 0
local: bad key
web: bad key
0

However, that removes the ability to deal with the situation where all actions fail. Not nice! Add to that the difficulty of coming up with a good MonadPlus instance for an application monad; one basically has to resort to the same thing as for IO, i.e. throwing an exception. Also not nice!

Fourth attempt: wrapping in ExceptT to get its Alternative behaviour

This was another suggestion from the Slack channel, and it is the one I like the most. Again it was suggested as a way to stop at the first successful action in a list of actions.

firstRightM :: (Foldable t, Functor t, Monad m, Monoid err) => t (m (Either err res)) -> m (Either err res)
firstRightM = runExceptT . asum . fmap ExceptT

It can be used similarly to the previous one. It's also easy to write a variant of fallBack for it:

fallBack :: (Monad m, Monoid err) => m (Either err res) -> m (Either err res) -> m (Either err res)
fallBack first other = runExceptT $ ExceptT first <|> ExceptT other

λ> loadFromDisk "bad key" `fallBack` loadFromWeb "good key"
local: bad key
web: good key
Right 8
λ> loadFromDisk "good key" `fallBack` undefined
local: good key
Right 8
λ> loadFromDisk "bad key" `fallBack` loadFromWeb "bad key"
local: bad key
web: bad key
Left "no such local key: bad keyno such remote key: bad key"

Yay! This solution has the short-circuiting behaviour I want, as well as collecting all errors on failure.
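The reason this works is ExceptT's Alternative instance in transformers, which needs a Monad for the underlying m and a Monoid for the error. It runs the first action and stops on a Right; otherwise it runs the second action and mappends the two errors. Spelled out by hand it looks roughly like `fallBackManual` below (the name is mine). The block also includes the ExceptT version so the two can be compared.

```haskell
import Control.Applicative ((<|>))
import Control.Monad.Trans.Except (ExceptT (..), runExceptT)

-- The ExceptT-based fallBack from above.
fallBack :: (Monad m, Monoid err) => m (Either err res) -> m (Either err res) -> m (Either err res)
fallBack first other = runExceptT $ ExceptT first <|> ExceptT other

-- Roughly what ExceptT's <|> does, written out by hand: stop at the first
-- Right; otherwise run the second action and prepend the first error.
fallBackManual :: (Monad m, Monoid err) => m (Either err res) -> m (Either err res) -> m (Either err res)
fallBackManual first other = do
  r <- first
  case r of
    Right x -> pure (Right x)
    Left e -> either (Left . (e <>)) Right <$> other
```

When both fail, both give `Left "no such local key: bad keyno such remote key: bad key"`, matching the session above.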

Conclusion

I'm still a little disappointed that liftA2 (<|>) isn't short-circuiting, as I still think it's the easiest of the approaches. It does rely on a deprecated instance of Alternative for Either String, which is a problem, but switching to Validation would be only a minor change.

Manually writing the fallBack function, as I did in the second attempt, results in very explicit code, which is nice as it often reduces the cognitive load for the reader. It's a contender, but using the deprecated Alternative instance is problematic, and introducing Validation, an arguably not very common type, takes away a little of the appeal.

In the end I prefer the fourth attempt. It behaves exactly like I want, and even though ExceptT lives in transformers (I pull it in via mtl), I feel that it is in such wide use that most Haskell programmers will be familiar with it.

One final thing to add is that the documentation of Validation is an excellent example of how to describe the behaviour of a type's instances. I wish that the documentation of other packages, in particular commonly used ones like base, transformers, and mtl, were more like it.

Comments, feedback, and questions

Dustin Sallings

Dustin sent me a comment via email a while ago; it's now March 2022, so it's taken me embarrassingly long to publish it here.

I removed a bit from the beginning of the email as it doesn't relate to this post.

… a thing I've written code for before that I was reasonably pleased with. I have a suite of software for managing my GoPro media which involves doing some metadata extraction from images and video. There will be multiple transcodings of each medium with each that contains the metadata having it completely intact (i.e., low quality encodings do not lose metadata fidelity). I also run this on multiple machines and store a cache of Metadata in S3.

Sometimes, I've already processed the metadata on another machine. Often, I can get it from the lowest quality. Sometimes, there's no metadata at all. The core of my extraction looks like this:

ms <- asum [ Just . BL.toStrict <$> getMetaBlob mid
           , fv "mp4_low" (fn "low")
           , fv "high_res_proxy_mp4" (fn "high")
           , fv "source" (fn "src")
           , pure Nothing
           ]

The first version grabs the processed blob from S3. The next three fetch (and process) increasingly larger variants of the uploaded media. The last one just gives up and says there's no metadata available (and memoizes that in the local DB and S3).
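Stripped of the GoPro specifics, the shape of Dustin's code can be sketched as a small helper. This is my own generalisation, not his code. The name `firstAvailable` is made up, and the sketch assumes each fetcher signals a miss by throwing an `IOException`, which is what IO's `<|>` (and hence asum) falls back on. The final `pure Nothing` means the whole thing never fails.

```haskell
import Data.Foldable (asum)

-- Try each action in order and return the first result; an action counts as
-- a miss if it throws an IOException. If every action misses, the final
-- `pure Nothing` makes the result Nothing instead of an exception.
firstAvailable :: [IO a] -> IO (Maybe a)
firstAvailable actions = asum (map (fmap Just) actions ++ [pure Nothing])
```

Because asum stops at the first action that doesn't throw, the expensive fetches further down the list only run when the cheaper ones miss.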

Some of these objects are in the tens of gigs, and I had a really bad internet connection when I first wrote this software, so I needed it to work.

Footnotes:

[1] I'm not sure if it's a good term to use in this case, as Wikipedia says it's for Boolean operators. I hope it's not too far a stretch to use it in this context too.

[2] In the version of base I'm using there is no asum, so I simply copied the implementation from a later version:

asum :: (Foldable t, Alternative f) => t (f a) -> f a
asum = foldr (<|>) empty

Using lens to set a value based on another

I started writing a small tool for work that consumes YAML files and combines the data into a single YAML file. To be specific, it consumes YAML files containing snippets of service specifications for Docker Compose and produces a YAML file for use with docker-compose. Besides being useful to me, I thought it'd also be a good way to get some experience with lens.

The first transformation I wanted to write was one that puts in the correct image name. So, only slightly simplified, it transforms

panda:
  x-image: panda
goat:
  x-image: goat
tapir:
  image: incorrect
  x-image: tapir

into

panda:
  image: panda:latest
  x-image: panda
goat:
  image: goat:latest
  x-image: goat
tapir:
  image: tapir:latest
  x-image: tapir

That is, it creates a new key/value pair in each object based on the value of x-image in the same object.
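To pin down the intended semantics independently of lens, here's a plain model of the transformation using Data.Map from containers. Each service is modelled as a map from keys to string values, which is a simplification of the real YAML/JSON Value, and `addImages` is a made-up name. I've chosen to leave a service without x-image untouched, which is an assumption about what's wanted and may differ from what the lens versions do in that case.

```haskell
import qualified Data.Map.Strict as Map

-- A service is modelled as a map from keys to string values, and a
-- document as a map from service names to services (a simplification).
type Service = Map.Map String String
type Document = Map.Map String Service

-- For every service with an "x-image" key, set "image" to "<x-image>:latest",
-- overwriting any existing "image". Services without "x-image" are left as-is.
addImages :: Document -> Document
addImages = Map.map addImage
  where
    addImage svc = case Map.lookup "x-image" svc of
      Just img -> Map.insert "image" (img ++ ":latest") svc
      Nothing -> svc
```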

First approach

The first approach I came up with was to traverse the sub-objects and apply a function that adds the image key.

setImage :: Value -> Value
setImage y = y & members %~ setImg
  where
    setImg o = o & _Object . at "image" ?~ String (o ^. key "x-image" . _String <> ":latest")

It did make me wonder if this kind of problem, setting a value based on another value, isn't so common that there's a nicer solution to it. Perhaps coded up in a combinator that isn't mentioned in Optics By Example (or maybe I'd forgotten it was mentioned). That led me to ask around a bit, which leads to approach two.

Second approach

Arguably there isn't much difference; it's still traversing the sub-objects and applying a function. The function makes use of view being run in a monad (here the function, or reader, monad) and ASetter being defined with Identity (a monad).
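The trick is that `f =<< g` for plain functions (the reader monad) means `\s -> f (g s) s`: `g` reads something from the object, and `f` gets both that value and the object itself. Here is a lens-free analogy of the same pattern on a Data.Map, not the lens code itself. It assumes the object is a `Map String String`, and the names `readXImage`, `setImageFrom` and `addImage` are made up.

```haskell
import qualified Data.Map.Strict as Map

type Object = Map.Map String String

-- Reads a value from the object: plays the role of view (at "x-image").
readXImage :: Object -> Maybe String
readXImage = Map.lookup "x-image"

-- Given what was read, builds the update to apply to the object: plays the
-- role of set (at "image") . ... As with setting `at k` to Nothing, a
-- missing value deletes the key.
setImageFrom :: Maybe String -> Object -> Object
setImageFrom (Just img) = Map.insert "image" (img ++ ":latest")
setImageFrom Nothing = Map.delete "image"

-- In the function (reader) monad, f =<< g = \o -> f (g o) o,
-- so both functions see the same object.
addImage :: Object -> Object
addImage = setImageFrom =<< readXImage
```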

setImage' :: Value -> Value
setImage' y =
  y & members . _Object
    %~ (set (at "image") . (_Just . _String %~ (<> ":latest")) =<< view (at "x-image"))

I haven't made up my mind on whether I like this better than the first. It's disappointingly similar to the first one.

Third approach

Then I thought it might be nice to split the fetching of x-image values from the addition of image key/value pairs. By extracting with an index it's possible to keep track of which sub-object each x-image value comes from. Then the two steps can be combined using foldl.

setImage'' :: Value -> Value
setImage'' y = foldl setOne y vals
  where
    vals = y ^@.. members <. key "x-image" . _String
    setOne y' (objKey, value) = y' & key objKey . _Object . at "image" ?~ String (value <> ":latest")

I'm not convinced though. I guess I'm still holding out for a brilliant combinator that fits my problem perfectly.

Please point me to "the perfect solution" if you have one, or if you just have some general tips on optics that would make my code clearer, or shorter, or more elegant, or maybe just more lens-y.

The timeout manager exception

The other day I bumped the dependencies of a Haskell project at work and noticed a new exception being thrown:

Thread killed by timeout manager

After a couple of false starts (it wasn't the connection pool, nor was it servant) I realised that a better approach would be to look at the list of packages that were updated as part of the dependency bumping.[1] Most of them I thought would be very unlikely sources of it, but two in the list stood out:

| Package | Pre | Post |
|---|---|---|
| unliftio | 0.2.14 | 0.2.18 |
| warp | 3.3.15 | 3.3.16 |

warp since the exception seemed to be thrown shortly after handling an HTTP request, and unliftio since the exception was caught by the handler for